Release

Today, we're excited to announce GLADOS-1, the first computer-use (CUA) model post-trained using collective, crowd-sourced trajectories.

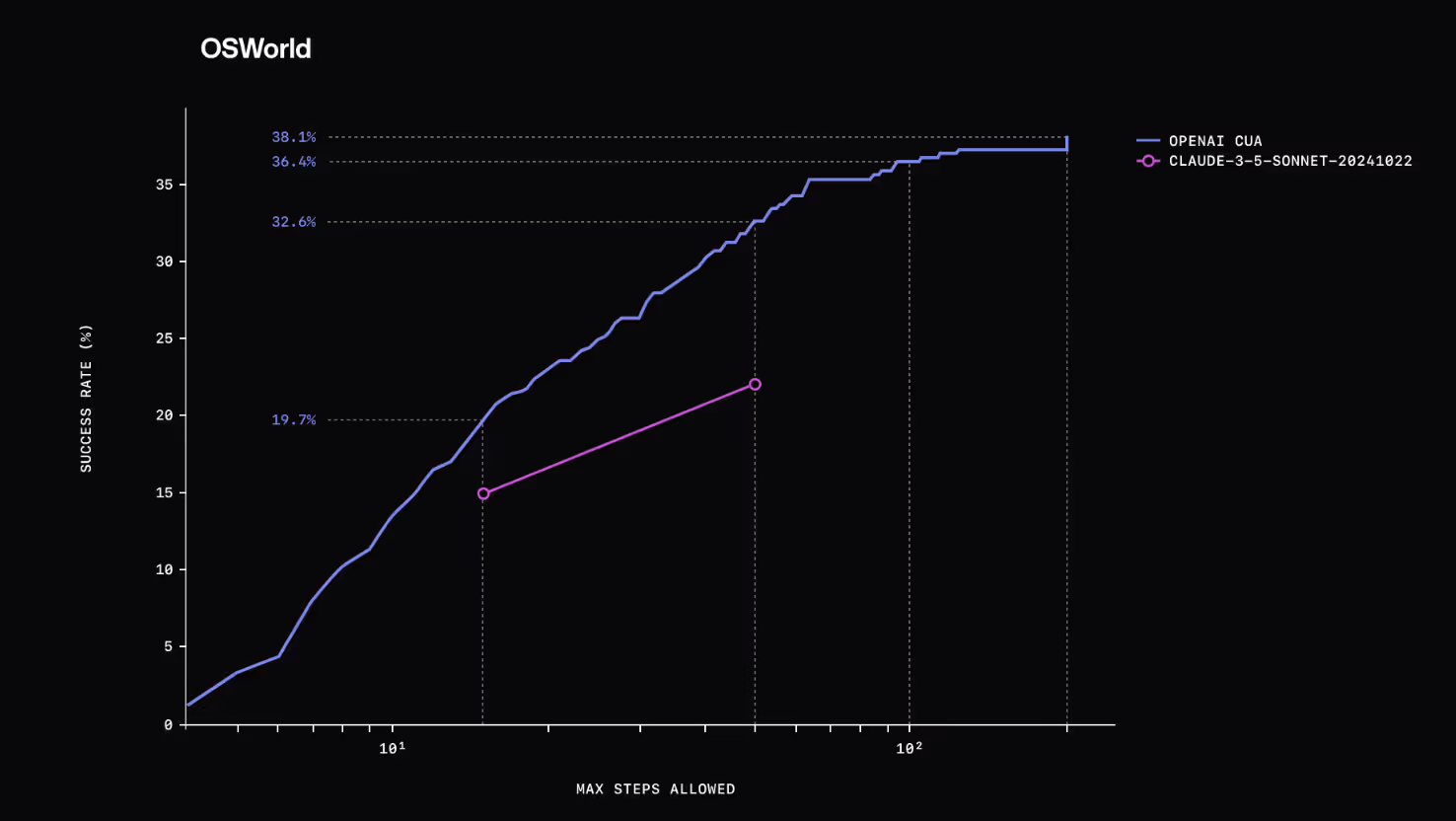

Post-trained on UI-TARS-7B-SFT, we improved performance of the base model on OSWorld.

Open-sourced code, model, and data samples are live.

Data Sample: https://huggingface.co/datasets/chakra-labs/pango-sample

Code: https://github.com/Chakra-Network/GLADOS-1

Model: https://huggingface.co/chakra-labs/GLADOS-1

Introduction

Computer Use Agents have seen an explosion in activity over the last 12 months. We cover their release and ascent in our last two blog posts and market map:

- Computer Use Agents Part I: Landscape, Training, Bottlenecks, and Pango

- Computer Use Agents Part II: Market Map & Ecosystem Analysis

- CUA Market Map

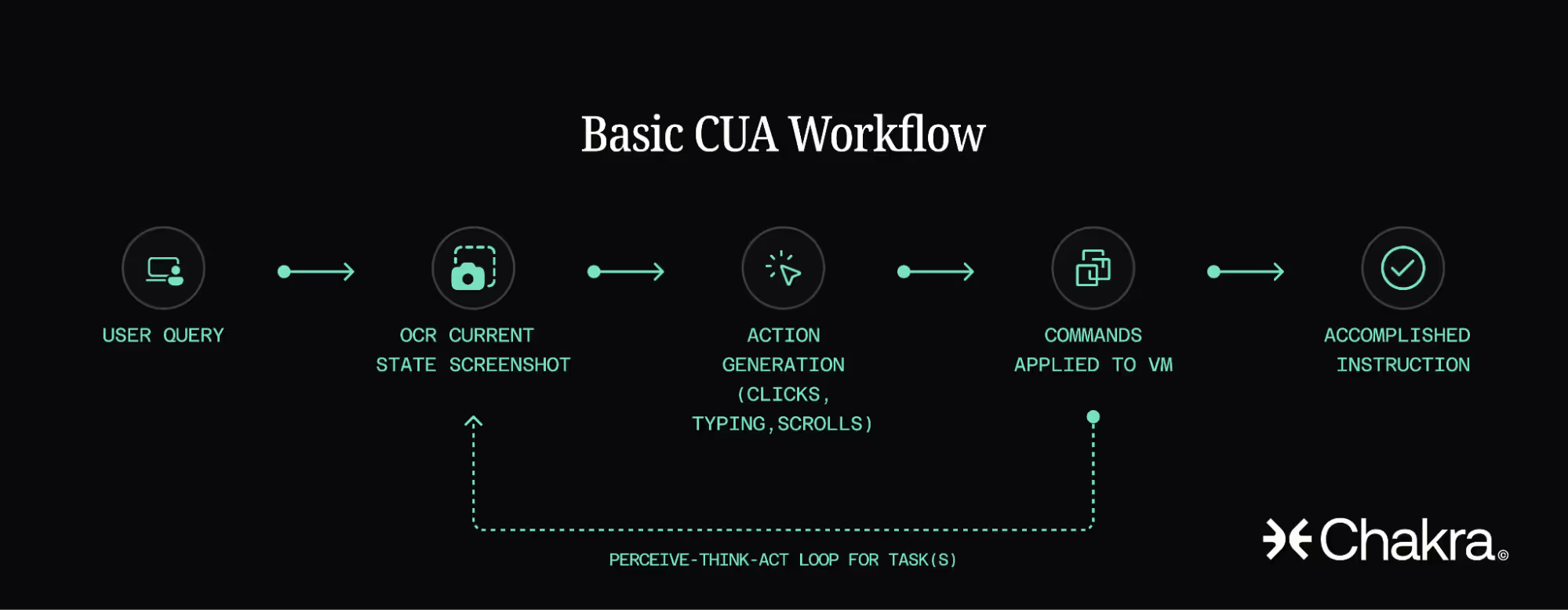

As a reminder, Computer Use Agents (CUAs) are AI systems designed to operate computers the way humans do: by seeing the interface, reasoning through tasks, and taking actions via keyboard and mouse.

The models have the opportunity to be extremely economically valuable, as 90% of human knowledge work happens on the computer. While powerful in concept, in practice these agents still struggle compared to human benchmarks. Top models have hit 43% on OSWorld, the industry standard benchmark, compared to 70% human performance.

Unlike text, which benefits from huge repositories of pre-training & post-training datasets like CommonCrawl, CUA data offerings remain paltry. Existing offerings rely on low quality synthetic data or limited expert demonstrations that are typically short or non-consequential.

We aimed to mitigate this problem with the PANGO (Productivity Applications with Natural GUI Observations & trajectories) and the work we share today reflects our work in bootstrapping enormous consequential datasets.

As it compares to today's highest quality open source dataset (Wang et al), the PANGO offering is roughly 6 times as big.

GLADOS-1

The model GLADOS-1 is inspired by the fictional GLaDOS from Portal. The character was an AI system designed to manage complex environments. Similarly, GLADOS-1 is engineered to navigate and control the digital environments where humans spend their working hours.

In this experiment, we selected UI-TARS-SFT-7B (a post-train of Qwen2VL) as our base model.

This model was selected for its existing context of visual grounding, experience in turn-based CUA interactions, and reasonable post-training size.

We constructed 3 different data formats for perception training:

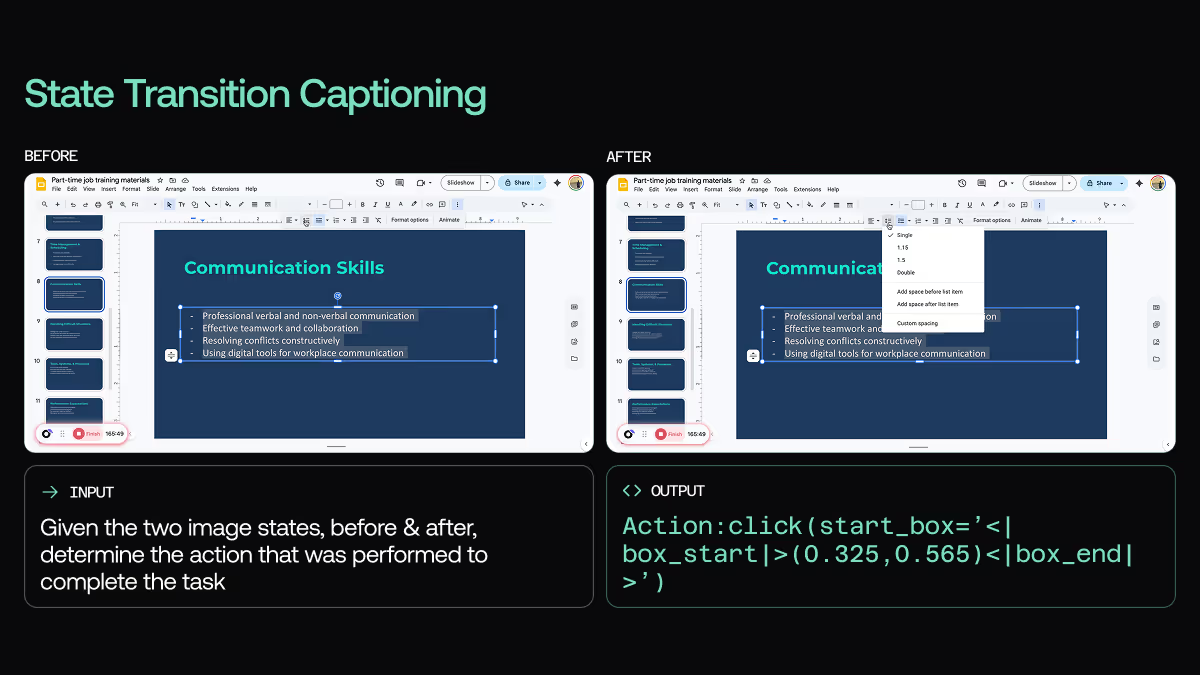

- State Transition Captioning

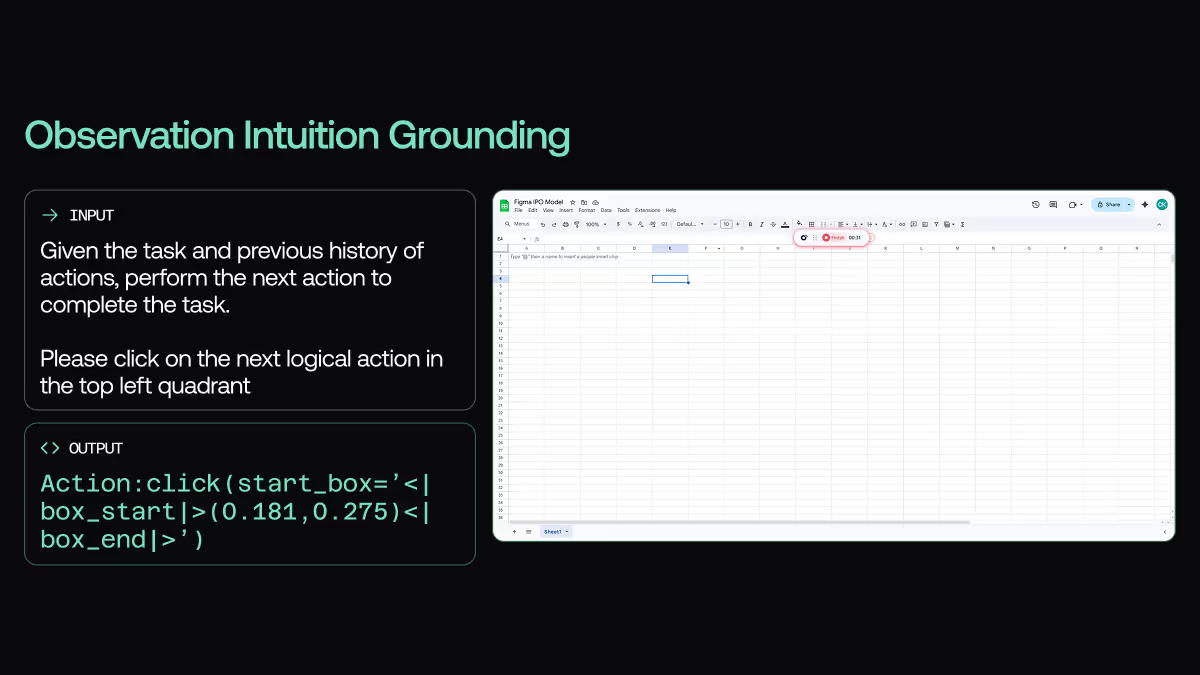

- Observation Intuition Grounding

- Sliding Window Behavior Cloning

(1) In State Transition Captioning, we assume a standard POMDP environment where the next state is determined by the current state and an action.

We train the model to predict what action occurred and at what coordinates between two sequential frames.

(2) In Observation Intuition Grounding, we provide the model a frame along with some guidance on where the next action should take place (top left corner, bottom right corner).

We train the model to predict the next action given the current frame and this semantic logical cue.

(3) In Sliding Window Behavior Cloning, we give the model 4 frames in a trajectory along with all the human actions that resulted in state transitions.

We train the model to predict the next action in the sequence given a logical task objective and frame history.

We perform curriculum learning, starting with easy tasks (1) and then move to more challenging tasks (2, 3) after a warm up.

In aggregate the data size across all 3 approaches represents ~10bn tokens.

We performed both standard SFT with Cross Entropy Loss as well as Online RL via GRPO. In the latter, reward was derived for (2) based on the difference between real and predicted coordinates.

Outcomes & Next Steps

In terms of performance, we meaningfully improved the base model's performance on OSWorld as well as its format compliance rates (generating well formatted actions that reduce steps to completion).

- Overall OSWorld Performance: 15% -> 17%

- Chrome Specific: 17% -> 26%

- Action Format Compliance Rates 39% -> 58%

In terms of next steps, first, we plan to continue scaling the PANGO data footprint. The current dataset was generated from a small minority of users onboarded from the waitlist.

Today, the dataset contains coverage on tasks like financial modeling, presentation design, document creation and design work. We plan to scale trajectories across many application surfaces as demand for diversity scales.

Second, online RL (PPO, GRPO) is becoming incredibly popular amongst our early customer base in CUA.

In the coming weeks, we plan to launch environments & verifiers based on the traces we collected from our SFT dataset and more.

Lastly, in future iterations we plan to take our larger dataset and online RL trainer and apply it to bigger, newer models like Qwen2.5-VL and the AutoGLM-OS-9B (Lai et al) when it becomes publicly available.

The lesson is clear - better datasets & environments results in better outcomes in CUA.

As computer use agents become increasingly important for automating digital workflows, we believe the path forward lies in combining sophisticated model architectures with diverse, real-world training data. GLADOS-1 represents our first major step in this direction, and we're excited to see how the community builds upon this foundation.

If you have a research interest in collaborating on the PANGO dataset, please reach out.