1. What Are Vector Databases?

Vector databases are specialized data stores designed to handle high-dimensional vector embeddings (i.e., numerical representations of data like text, images, or audio).

Unlike traditional relational databases that excel at exact matches and structured queries, vector databases enable similarity search based on the distance between vectors in a vector space.

In practical terms, this means finding data that is semantically similar (in meaning or content) rather than simply textually matching keywords, which is crucial for AI applications.

By efficiently storing and querying vector embeddings, vector databases power semantic search, recommendation systems, and anomaly detection.

Why is this so important now?

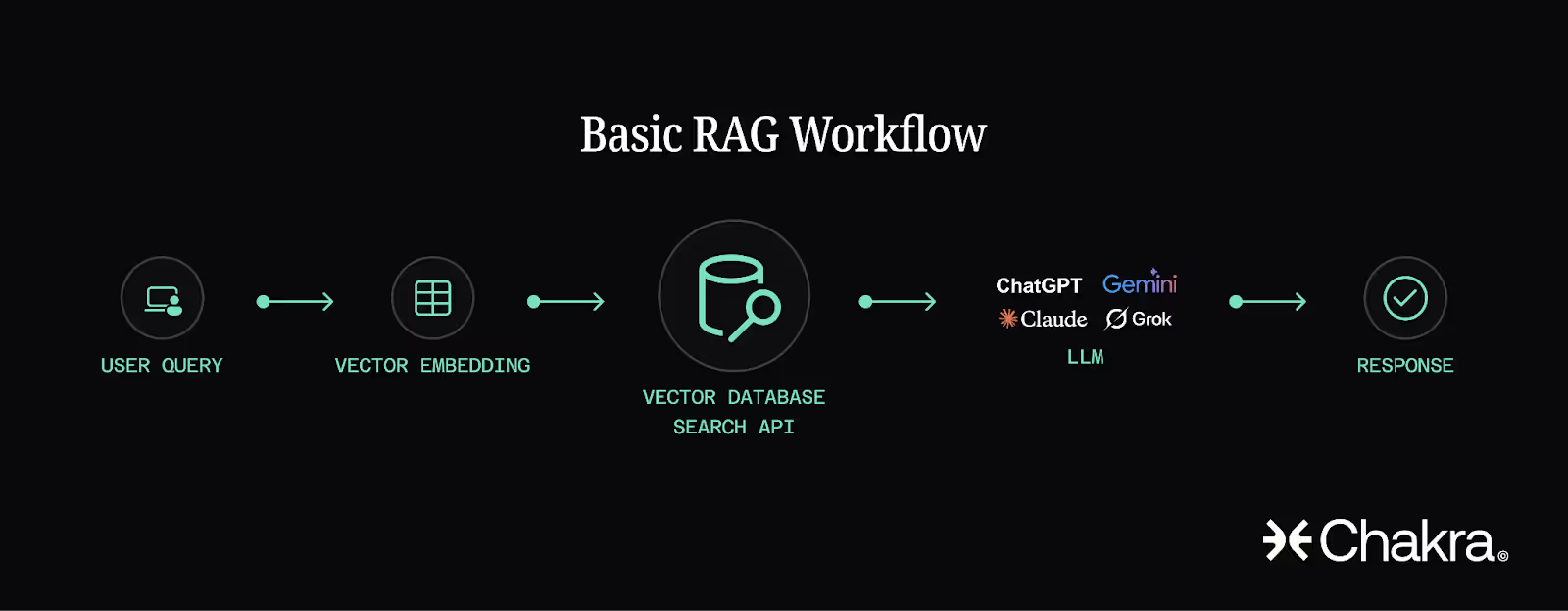

The rise of mainstream LLM use (and techniques like RAG) has made semantic search and near-real-time retrieval core parts of AI workflows.

In a RAG pipeline, a user query or document is converted into a vector embedding, which is then used to retrieve relevant information from a vector database. The retrieved context (documents, knowledge base entries, etc.) is fed into an LLM to produce a grounded answer. This allows AI systems to stay up-to-date, factual, and domain-aware without retraining models.

Vector databases provide the speed and scale to make this feasible, allowing a model to search millions of embeddings in milliseconds to find relevant context.

We’ve covered agentic RAG workflows in depth here.

Beyond RAG, many AI-powered features rely on similarity search. Semantic search engines find results based on meaning, recommendation systems compute nearest-neighbor items for personalization, and anomaly detection systems find outliers by distance in embedding space.

Traditional databases weren’t optimized for these vector operations, so a new breed of databases has emerged to fill the gap.

Vector databases are a critical infrastructure layer for AI. They act as the “memory” or knowledge base for AI applications, allowing you to store vector embeddings and query them in semantically meaningful ways.

Choosing the right vector database has a significant implication on your application’s performance, scalability, and capabilities.

2. Major Players in the Vector DB Landscape

Here, we’ll aim to compare six of the leading vector databases:

- Pinecone

- Weaviate

- Milvus (and its cloud service Zilliz)

- Qdrant

- Chroma

- PgVector (Postgres with the pgvector extension).

Each takes a slightly different approach, from fully managed services to open-source libraries, and each has its own strengths and trade-offs. Before we dive into how to choose, let’s briefly introduce each solution and what makes it unique.

.avif)

2.1: Pinecone

Managed, Scalable Vector Search as a Service

Pinecone is a fully managed, cloud-native vector database known for its scalability and developer-friendly service. Launched in 2019, Pinecone pioneered “vector database as a service,” allowing teams to offload all the infrastructure complexity. You simply load your vectors and query via Pinecone’s API.

Behind the scenes, it handles sharding, indexing, and scaling to billions of vectors with high performance. Pinecone’s architecture cleanly separates storage and compute, which lets it maintain fast query times even as your dataset grows into the billions. In practice, Pinecone can index data in real-time and scale automatically without manual tuning.

Because it’s fully managed, Pinecone excels for enterprises or production apps where reliability and low operational overhead are paramount. There’s no need to manage servers or tune index parameters. A developer can get a simple API, client libraries in languages like Python and JavaScript, and Pinecone takes care of the rest.

Pinecone offers advanced features like hybrid search (combining keyword filtering with vector similarity) and metadata filtering, which are useful for many applications. Developers integrate Pinecone with popular AI frameworks (e.g., it’s supported in LangChain out-of-the-box) to build semantic search or RAG pipelines quickly.

Use Cases & Differentiators: Pinecone is ideal when you need a production-ready vector search at scale without wanting to manage infrastructure. Teams with strict uptime requirements or large-scale customer-facing applications often choose Pinecone. It shines in use cases like semantic search in SaaS applications, personalized recommendations for millions of users, or AI assistants that require snappy responses backed by large knowledge bases.

Because it’s managed, even small teams can deploy enterprise-grade vector search. However, if your budget is tight or you require on-prem deployment (e.g., for sensitive data environments), Pinecone may not be the best fit due to its cost and cloud-only nature.

.avif)

2.2: Weaviate

Open Source with a Semantic Knowledge Graph Twist

Weaviate is an open-source vector database with a schema-based approach and extensive API options, combining a vector search engine + a knowledge graph.

With Weaviate, you define a schema (classes with properties) for your data, and you can store objects along with their vector embeddings. This allows for hybrid queries that mix semantic vector search with traditional filters or keyword search on object properties.

Weaviate exposes a GraphQL API for queries, making it intuitive to retrieve not just similar vectors but also traverse relationships (if your data is interlinked as a graph). This can be powerful for complex applications like enterprise knowledge bases, where you want to combine unstructured semantic similarity with structured filters or where data has inherent relationships.

Under the hood, Weaviate uses the popular HNSW algorithm for approximate nearest neighbor search and can integrate with modules for text and image vectorization. Weaviate provides modules for models like OpenAI, Cohere, etc., so developers can vectorize data on the fly during import. It’s designed to scale to billions of objects, typically running as a distributed cluster.

Developers can self-host Weaviate (it’s open source, written in Go), or use the Weaviate Cloud Service (WCS), which is their managed offering. The managed service includes a serverless option that starts at around $25/month for small projects and scales up by usage. They even offer a hybrid SaaS where you can bring your own cloud (deploy in your VPC) under an enterprise plan.

Use Cases & Differentiators: Weaviate excels when your application leverages the combination of semantic search and structured data, or when you require a flexible schema.

For example, if a developer is building a knowledge graph or a semantic product catalog, Weaviate allows you to store rich object data (with types and properties) alongside vectors, and query both by vector similarity and by fields (e.g., find documents similar to X that are tagged as finance). Weaviate’s GraphQL query interface is very powerful for such needs.

Weaviate also supports multi-modal data (you can vectorize text, images, etc., and search within a single database), which is great for use cases like searching both text and images in one query.

The trade-off is that Weaviate’s learning curve can be a bit steeper, a user needs to define schemas and learn its custom syntax. It’s also slightly heavier to run than some others, and tuning it for very high throughput scenarios may require expertise.

Weaviate is a strong choice for teams that value open-source flexibility and schema-rich features over out-of-the-box simplicity. It’s popular in enterprise knowledge management, academic research search engines, and any scenario where data relationships matter in addition to semantic similarity.

.avif)

2.3: Milvus (Zilliz)

Distributed Vector Database Built for Scale

Milvus is an open-source vector database purpose-built for massive scale and high-performance search. It originated in 2019 (now a graduated project of the LF AI & Data Foundation) and is backed by Zilliz, the company that offers a managed version.

Milvus’s design is cloud-native: it can run in a distributed mode across many nodes, scaling out to handle billions of vectors and very high query throughput. It supports advanced indexing techniques and multiple distance metrics, allowing you to choose the right index (HNSW, IVF, etc.) for your use case.

Notably, Milvus can leverage hardware like GPUs to accelerate vector search computations, a big pro for tasks like image similarity use cases where GPU acceleration drastically speeds up search.

In terms of features, Milvus offers much of what you’d expect from an enterprise database. It has built-in replication and high-availability, supports partitioning, and even has features like data snapshots and backups.

Milvus also allows hybrid search combining vectors with scalar filters (e.g., filter by a timestamp or category while doing similarity search). Because of its distributed nature, you can deploy Milvus on Kubernetes and scale it out similarly to how you’d scale a large data system.

Use Cases & Differentiators: Milvus is often the go-to when organizations anticipate very large collections or need strong guarantees in production.

If you are working with tens of millions or billions of vectors (a web-scale image similarity search or an AI system that indexes an entire data lake), Milvus is built to handle that kind of load efficiently.

Its ability to shard data and parallelize queries across nodes means it can maintain low latencies even as data grows. Milvus is also attractive for more technical teams that want fine-grained control (choosing index types, tuning search accuracy/speed trade-offs) and who have the engineering resources to manage a distributed system.

Common use cases include multimedia search (e.g., finding similar images or videos in huge catalogs), time-series or IoT anomaly detection on vectorized sensor data, or any AI application at enterprise scale that demands reliability and throughput.

The opportunity cost is that running Milvus can be more complex than lighter solutions. There’s more infrastructure to maintain (especially in distributed mode) and expertise needed to tune it properly.

If you’re a startup building a quick MVP, Milvus might be overkill, but for large-scale AI platforms, it’s a battle-tested option with a strong community and continuous development.

.avif)

2.4: Qdrant

High-Performance Open Source Vector DB with Filtering

Qdrant is another popular open-source vector database, distinguished by its strong performance (written in Rust) and excellent support for filtering and payloads.

Qdrant’s philosophy is to be a production-ready advanced search engine for vectors, offering a straightforward API (REST and gRPC) and the ability to attach arbitrary metadata (called payloads) to your vectors. This metadata can then be used to filter search results. For example, you might store an author:"Alice" field or a timestamp alongside each embedding and filter your similarity search by those fields.

Qdrant’s filtering is one of the best-in-class, which makes it very useful for applications where you need to combine vector search with structured conditions (e.g., “find similar documents in the finance category”).

Under the hood, Qdrant uses the HNSW algorithm for ANN search (like many others), but the Rust implementation offers speed and memory efficiency. It also supports distributed deployment. Recent versions allow sharding across multiple nodes for scalability, and it ensures data consistency with ACID-compliant operations for reliability.

Qdrant provides official client libraries, developer documentation, and a free Cloud service. You can spin up a managed Qdrant instance (with a generous 1GB free tier) on their cloud, or self-host it via Docker easily.

Use Cases & Differentiators: Qdrant is a great choice for developers who need a balance of high performance, flexibility, and cost-effectiveness.

Thanks to Rust and efficient design, Qdrant can handle large datasets and high query rates on modest hardware (often yielding lower latencies). It’s especially well-suited for applications where vector search needs to be combined with complex filters (think of e-commerce search where you filter by product attributes and vector similarity of descriptions, or a recommendation system where you only want results for a certain user segment).

Qdrant’s ability for real-time updates and focus on being “production-ready” (e.g., you can do consistent updates/deletes) means it’s used in scenarios like fraud detection (finding similar fraudulent patterns with filters by date/region), and content recommendation feeds that personalize on the fly.

One differentiator is Qdrant’s simplicity in integration. It doesn’t impose a new query language (you use a JSON-based query with vector + filters), and fits nicely into microservice architectures with its REST/gRPC endpoints. This, plus the open-source nature, has led to a growing community around Qdrant.

If we compare it, Qdrant might not have as many built-in AI modules as Weaviate or as many indexing options as Milvus, but for a vast number of use cases, it hits a sweet spot of speed + filtering + ease of use.

Qdrant is often mentioned as a solid alternative to Pinecone for those who want to avoid SaaS lock-in, or as an upgrade path for those who started with simpler solutions and need more performance.

.avif)

2.5: Chroma

The Developer-Friendly Embedding Database (Open Source)

Chroma (also known as ChromaDB) is an open-source vector database tailored for simplicity and a tight focus on AI use cases.

Chroma was built from the ground up to serve as a lightweight store for prompts, embeddings, and documents in applications like chatbots and assistants.

ChromaDB is written in Python (with some performant bits in other languages) and offers a very simple API for adding documents with embeddings and querying them back. Many developers like Chroma because it can run in-memory during development or be persisted to disk, and it works seamlessly inside a Python application (you can pip install chromadb and get started in minutes).

The key idea of Chroma is to make RAG dead simple to implement.

Chroma integrates nicely with frameworks like LangChain and LlamaIndex, and even without those, its API is straightforward. Developers don’t need to set up a server or cluster if they don’t want to. Chroma can run as an embedded library inside your app (or you can run a separate Chroma server for a more production setup).

By default, Chroma handles embedding storage, similarity search, and can store associated metadata (e.g., text chunks associated with each vector). Under the hood, it uses techniques like HNSW for similarity search, but all that is abstracted away unless you want to tune it.

Use Cases & Differentiators: Chroma’s sweet spot lies in the rapid development and prototyping of AI applications.

If you’re building an MVP of an LLM-powered app (for example, a question-answering bot over your documents), Chroma lets you get up and running extremely quickly.

The API doesn’t require knowledge of database internals; you can treat it almost like a Python list or dict where you add your data and query it. This reduces friction for developers who are more AI/ML-oriented and don’t want to become database admins.

Chroma is also lightweight in the sense that it doesn’t force you to run heavy services. For a lot of use cases, running it on a single machine or within your existing app is sufficient (it’s efficient enough for moderate scale, and if needed, you can scale it vertically or sharded manually).

However, Chroma is not (yet) designed for massive scale or complex deployment topologies. It lacks some enterprise features (e.g., user authentication, sharding across many nodes, advanced indexing choices) and its performance on very large datasets (tens of millions of vectors) may lag behind Rust or C++ based systems.

This is why we often see Chroma used in smaller-scale production or internal tools, as well as for teaching/research purposes. Many teams start with Chroma for the development speed, and if they hit performance limits, they consider migrating to something like Pinecone or Qdrant. This “start simple, then scale out” approach is common.

Chroma is purpose-built for RAG and LLM apps, making it one of the easiest to integrate for those scenarios. If your priority is to get an AI feature working now (and your scale is in the realm of, say, thousands to a few million embeddings), Chroma could be a perfect fit.

.avif)

2.6: PgVector

Using PostgreSQL as a Vector Database

Last but not least, we have pgvector, which is not a standalone database but an extension that turns the popular PostgreSQL relational database into a capable vector database.

This approach is compelling because it leverages the strength and familiarity of Postgres (a tool that many teams already use) to store and query vectors alongside traditional data. By installing the pgvector extension, you can add a column of type vector (for example, a 1536-dimensional vector to match OpenAI’s embeddings) to any table, and then create an index on that column for fast nearest-neighbor search.

Effectively, Postgres + pgvector gives you hybrid capabilities: you can write SQL queries that combine regular filters (e.g. WHERE category = 'sports') with a vector similarity search condition (ORDER BY embedding <-> $query_vector LIMIT 5), all in one database.

Pgvector supports several distance metrics (cosine similarity, inner product, Euclidean) and offers two types of indexes: IVF (IVFFlat) and HNSW, which are popular ANN algorithms. This means you can choose an index based on your needs (HNSW for better query performance at the cost of more memory, or IVF for lower memory usage).

Importantly, because it’s Postgres, you get transactional updates, persistence, and all the ecosystem benefits (backup tools, SQL clients, etc.) essentially for free. Many cloud database providers (like AWS RDS, Timescale, Supabase) support pgvector now, so you can often enable it with a configuration change and not have to run any new infrastructure.

Use Cases & When to (Not) Use: The appeal of pgvector is mainly simplicity and integration.

If you already have your application data in Postgres, adding vector search right there can drastically simplify your architecture. There’s no need to spin up and maintain a separate vector DB, handle data syncing between systems, or learn a new query API. You use standard SQL and get results, combining vectors and relational data as needed.

For many small-to medium-scale applications, this works like a charm. For datasets on the order of a few thousand to a few hundred thousand vectors, Postgres with pgvector can provide adequate performance (especially with HNSW indexes) while keeping operations minimal. Pgvector can be successful at scale, handling millions (and in some cases tens of millions) of vectors in production. However, performance varies based on hardware and index tuning.

When might you outgrow Postgres? The general guidance is that if your vector search workload becomes very large or very latency-sensitive, a specialized vector DB will outperform a general-purpose database. Vector search is computationally heavy, and Postgres wasn’t originally built for that kind of workload.

Pgvector indexes reside in Postgres shared memory and have to coexist with other Postgres operations. Beyond a certain point (dataset size or query rate), you might find query times rising or resource usage spiking. Also, Postgres doesn’t (as of now) distribute a single table’s data across multiple servers for scaling out, so you’re limited to scaling up on one machine or adding read replicas. Dedicated vector DBs like Milvus or Pinecone are built to shard data and utilize multiple nodes efficiently for search.

Additionally, specialized features like fast dynamic indexing, hybrid search with advanced ranking, or GPU acceleration are outside Postgres’s scope. So teams often start with pgvector for convenience and then “graduate” to a dedicated vector database if needed for performance or scale.

Postgres with pgvector is already a capable vector database for many scenarios. It especially makes sense when you want to avoid infrastructure sprawl and keep everything in one system, or when your vector search is more of a complement to a larger app (rather than the core of a large-scale semantic search engine).

Pgvector lowers the barrier to entry for adding semantic search. Any team familiar with SQL can start performing similarity queries without needing to learn new tools. Just be mindful of its limits. If you need sub-10ms searches over billions of vectors, Postgres likely won’t meet the requirement. But for countless applications at a moderate scale, pgvector offers a pragmatic and cost-efficient path to add AI capabilities.

.avif)

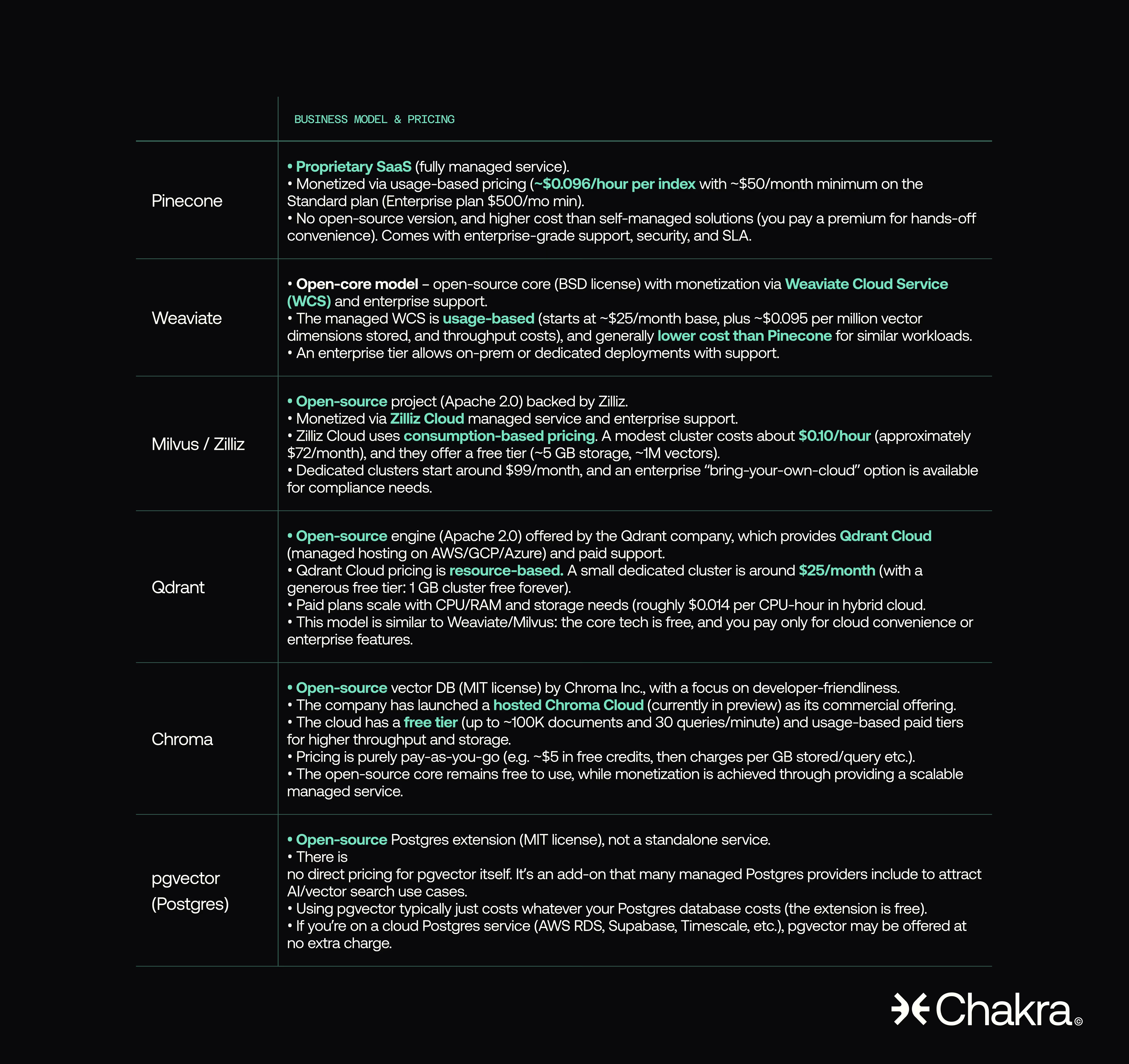

3. Business Models & Pricing

Each solution balances cost vs convenience differently. Proprietary services like Pinecone charge a premium for a turn-key experience and support, whereas open-source options (Weaviate, Milvus, Qdrant, Chroma, pgvector) let you avoid license fees and vendor lock-in by self-hosting, with paid options only when you need managed infrastructure or support.

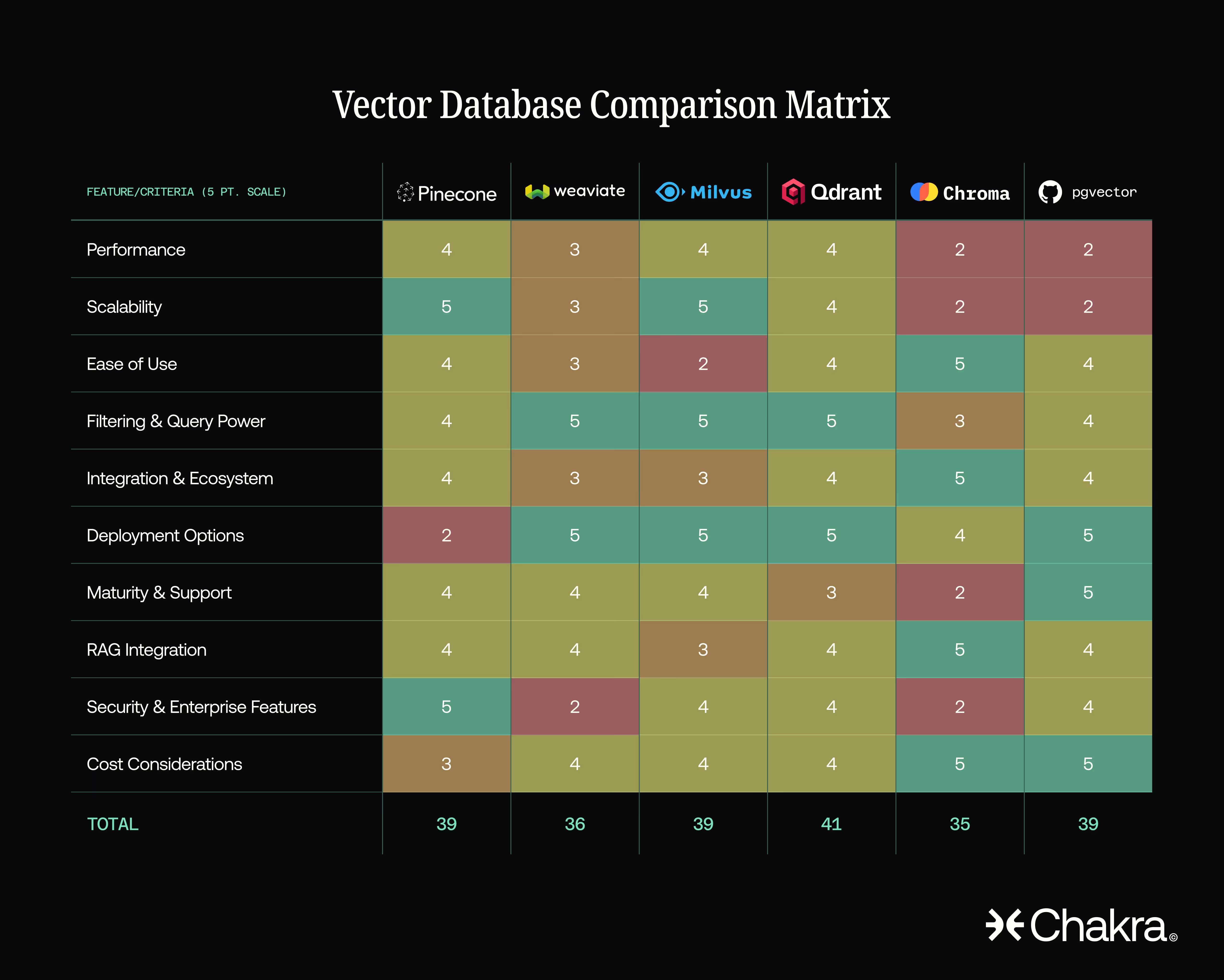

4. Key Differentiators & Comparison

There is no one-size-fits-all “best” vector DB.

Each has design decisions that favor certain scenarios.

The comparison matrix below provides a high-level glance at how some of these stack up across features (on an. estimated 5 pt. scale of relative strengths):

These are general trends. Actual results will depend on your specific dataset and workload.

It’s always a good idea to benchmark yourself when possible.

5. How to Choose the Right Vector Database

For developers and product teams, the decision often boils down to matching your needs with the strengths of each solution. Here’s a quick guide:

If you want a plug-and-play hosted solution with minimal ops:

Consider Pinecone.

It’s hard to beat the ease of “here’s an API, it just works.” Pinecone is great if engineering time is more precious than cloud spend, or if you absolutely need a proven, fully-managed service for production. Just be prepared to pay for that convenience and ensure your data can be stored in a third-party cloud.

If you need an open-source solution or on-prem deployment:

Look at Weaviate, Milvus, or Qdrant.

All three allow self-hosting and have thriving open-source communities.

- Weaviate is ideal if your data has a rich schema or if you like GraphQL and knowledge graph capabilities. It’s used when combining semantic and symbolic data is key.

- Milvus is a top pick if you anticipate massive scale and want a system designed for distributed deployments. It might require more devops investment, but it pays off for very large applications.

- Qdrant is a strong general-purpose choice for those who want performance and filtering but still prefer open-source. It’s simpler to set up than Milvus and can scale to very large datasets too (its distributed mode is relatively new but promising). If filtering on metadata is a major requirement, Qdrant’s filtering is among the best.

If you are already using Postgres heavily:

Try pgvector first.

For teams with existing relational data, adding pgvector can be a quick win. You can keep all your data in one place and use SQL to query everything. This is perfect for small to mid-scale needs.

If you have a Postgres-backed web app and want to add a semantic search feature on user-generated content, pgvector lets you do that without spinning up new infrastructure.

Monitor performance as you scale; if you notice slow queries or heavy CPU usage as your embedding count grows into the millions, that might be the signal to consider offloading to a specialized vector DB. Plenty of applications never reach that point, and running a single database system has operational simplicity.

If you need complex SQL joins or transactions involving vector data and relational data together, pgvector is the only solution that does that within one DB (others would require combining results at the application layer).

If you’re building a quick prototype or MVP for an AI app:

Chroma is your friend.

Its developer experience is tailored for speed. You can get semantic search working in a few lines of code. It’s ideal for hackathons, demos, or early-stage products where you prioritize iterating on the idea over optimizing infrastructure.

Chroma also works well for personal or internal tools (a small-scale document Q&A system for your team) due to its simplicity. Just remember that for a production, user-facing product that grows, you might later need to either enhance Chroma’s deployment or switch to something else if the requirements outpace it. But that initial velocity it provides is often worth it.

If your queries need to combine semantic search with structured filters or knowledge graph-like relationships:

Weaviate is a top choice (open source or cloud).

Its ability to perform complex hybrid queries (vector + keyword) and enforce a schema on data can provide more relevant results in such scenarios. Similarly, if you’re representing data as a graph of entities and still want vector search on properties, Weaviate covers both aspects.

If real-time updates and high-throughput are critical:

You might lean towards Qdrant or Milvus.

Qdrant is designed for production with consistency and can handle frequent updates well (it even has segment-based updates to optimize those).

Milvus can ingest large streaming data (especially with its newer versions and if using their Flink connector, etc., making it suitable for real-time applications like updating an index of news articles on the fly.

Pinecone also does real-time indexing seamlessly, so it’s a candidate if managed is fine. Some vector databases might need periodic batch indexing or have slower ingestion when using certain index types (e.g. HNSW can be slower to insert many items unless configured well), so check the throughput if your use case is an ever-changing dataset.

If budget is a big constraint:

Favor the open-source/self-hosted route or pgvector.

You can run Weaviate, Milvus, and Qdrant on a single-node VM to start, which might be very cheap compared to a usage-based cloud service that charges by vector count or queries.

Chroma and pgvector are practically free aside from the machine costs. As an example, a single $20–$50 per month cloud instance running Qdrant or Milvus could handle millions of vectors, whereas a managed service might charge hundreds for the same scale (though it saves you maintenance).

If you already have a Postgres running under capacity, leveraging it via pgvector costs nothing extra and might delay or negate the need for a separate cluster. Just be mindful not to overburden your primary database if the vector workload grows.

Finally, remember that you can switch vector DBs as you scale up or change primary use cases. Because your data in a vector DB is mostly the embeddings (which you can regenerate if you have the original source data and model), the main cost of switching is operational (re-indexing and perhaps some application refactoring).

Some teams even use multiple: Chroma during development and Pinecone in production, or pgvector for one part of the product and Qdrant for another, where requirements differ. The ecosystem is converging in terms of features, so it often comes down to what fits with your current tech stack and your team’s expertise.

6. Future Outlook

We expect vector databases to continue improving on speed (leveraging hardware like GPUs, FPGAs), on contextual search capabilities (e.g., better hybrid search, supporting more complex queries combining vectors and text), and on ecosystem integration (more SDKs, better monitoring tools, etc.).

There’s likely to be development in data management around vectors: tools for keeping embeddings up-to-date when your source data changes, or for evaluating the quality of your vector search results (did your DB retrieve the “right” ground truth documents?). As enterprises adopt these developments, features around security, compliance, and governance will garner more attention.

In all scenarios, having a modular approach will serve teams well. You might choose one vector DB today and another tomorrow as the landscape shifts, but the overall architecture (an AI system that retrieves relevant knowledge, injects it into models, and produces answers or predictions) is here to stay.

Whether you choose a fully managed solution like Pinecone, an open-source powerhouse like Weaviate/Milvus/Qdrant, the developer-friendly Chroma, or stick with the trusty Postgres via pgvector, Chakra provides you with rich structured data wherever your vector database lives.

Bring whatever database you like.

We’re here to help you make it all work together.

.avif)