What is RAG?

Remember back in 2023 when you used ChatGPT before it had web search and asked about current events, only to get a generic response or a note about its knowledge cutoff?

Remember how frustrating that was?

Well, that’s the limitation RAG has solved. By retrieving fresh or domain-specific data in real time, RAG allows models to stay current and accurate without retraining.

Retrieval-Augmented Generation (RAG) is a technique for enhancing generative AI models by feeding them relevant external information within workflows.

Instead of relying solely on an LLM’s static training, a RAG system retrieves up-to-date or domain-specific data from an external source (like a document database or API) and augments the model’s input prompt with that data. The LLM then generates an answer grounded in this retrieved context.

By combining the LLM’s parametric knowledge (patterns learned in its weights) with non-parametric memory (information retrieved on demand), RAG systems deliver responses that are fluent, contextually accurate, and verifiable.

RAG was proposed in a 2020 research paper, predating the widespread adoption of mainstream LLMs.

Today, RAG is an industry-accepted standard for improving the outputs of LLMs.

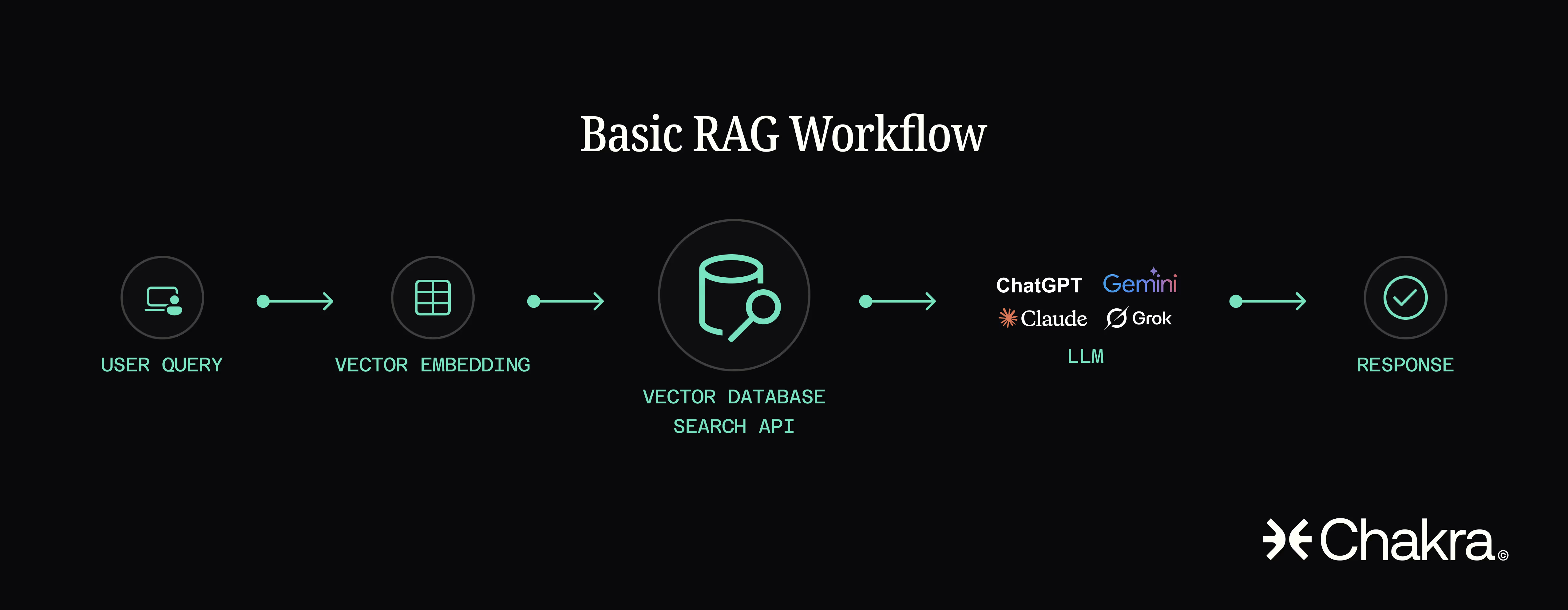

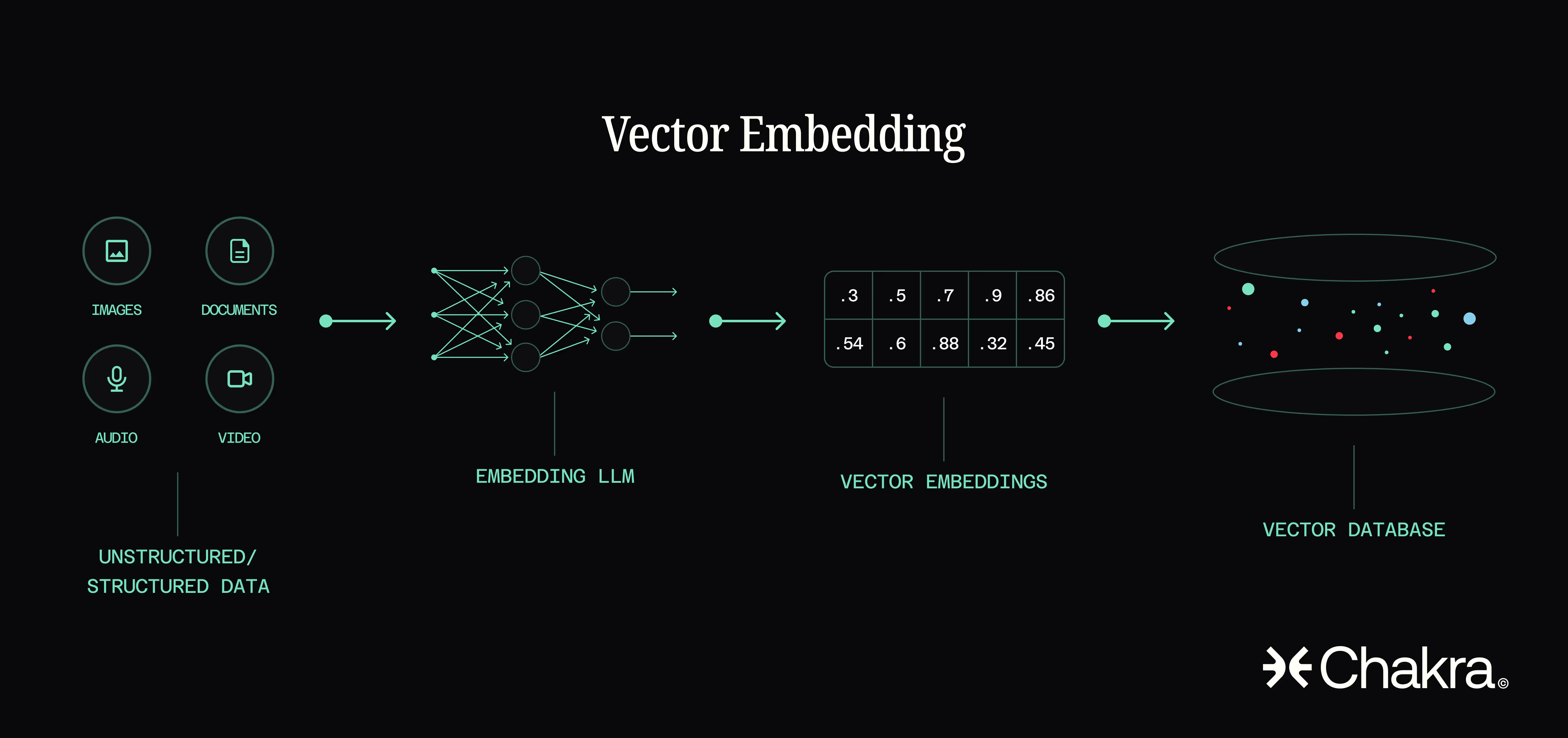

Implementing a basic RAG pipeline is relatively straightforward: a user query is converted into a vector embedding, which is then used to retrieve relevant documents from a vector database or search API. These documents (or context snippets) are prepended to the prompt sent to the LLM, enabling it to generate responses grounded in both the original question and the retrieved information.

This simple yet powerful workflow transforms generic LLMs into more context-aware systems capable of producing timely, accurate, and trustworthy outputs.

The RAG retrieval step happens at query time. Unlike fine-tuning or training new parameters, RAG doesn’t alter the model’s weights; it injects knowledge into the model’s input. This means you can update the knowledge base (e.g., add new documents or data) and the system will immediately use the new information in responses – no model retraining needed.

RAG is a more efficient way to keep a model informed and domain-aware, making AI outputs more accurate, trustworthy, and tailored to real-world needs.

Why RAG Matters

RAG addresses some of the most significant limitations of standalone LLMs. By grounding model outputs in external data, RAG brings a host of benefits:

- Improved Accuracy: LLMs often hallucinate, but RAG grounds responses in real data, like policy docs or databases, reducing falsehoods. With citations akin to research footnotes, users can verify claims and trust the output.

- Real-Time Information: Traditional LLMs can’t access recent info due to fixed training cutoffs. RAG solves this by fetching up-to-date data on demand, like pulling live stock prices (via a financial database API), keeping models current without costly re-training.

- Domain Customization: Generic LLMs fall short for domain-specific tasks. RAG enables customization by injecting proprietary knowledge at runtime, allowing a medical chatbot to access patient records or databases. It also supports access controls, ensuring users only see authorized info. Instead of retraining the model, you shape its output by curating what it retrieves.

- Consistency: RAG enhances AI behavior by grounding responses in a single, reliable knowledge base, rather than relying on scattered training data. This reduces contradictions, simplifies error correction, and gives developers visibility into which sources were used, making it easier to debug and improve outputs.

- Cost-Effectiveness: Training large models is costly and slow. RAG offers a faster, cheaper alternative by keeping knowledge external and retrieving it as needed. It’s simple to set up with open-source tools or managed services like Amazon Bedrock and Google Vertex AI. This makes accurate, tailored AI far more accessible.

- Dynamic Reasoning with Fewer Tokens: RAG effectively extends an LLM’s context window by fetching only the most relevant info, avoiding the need to cram entire knowledge bases into long, costly prompts. This keeps inputs concise, reduces latency, and delivers focused answers. RAG lets you inject 1000 pages of knowledge in 100 tokens or less by fetching just the right snippets.

RAG is essential because it enhances the reliability, relevance, and usability of AI. It bridges the gap between static AI models and the dynamic, data-rich world in which they need to operate.

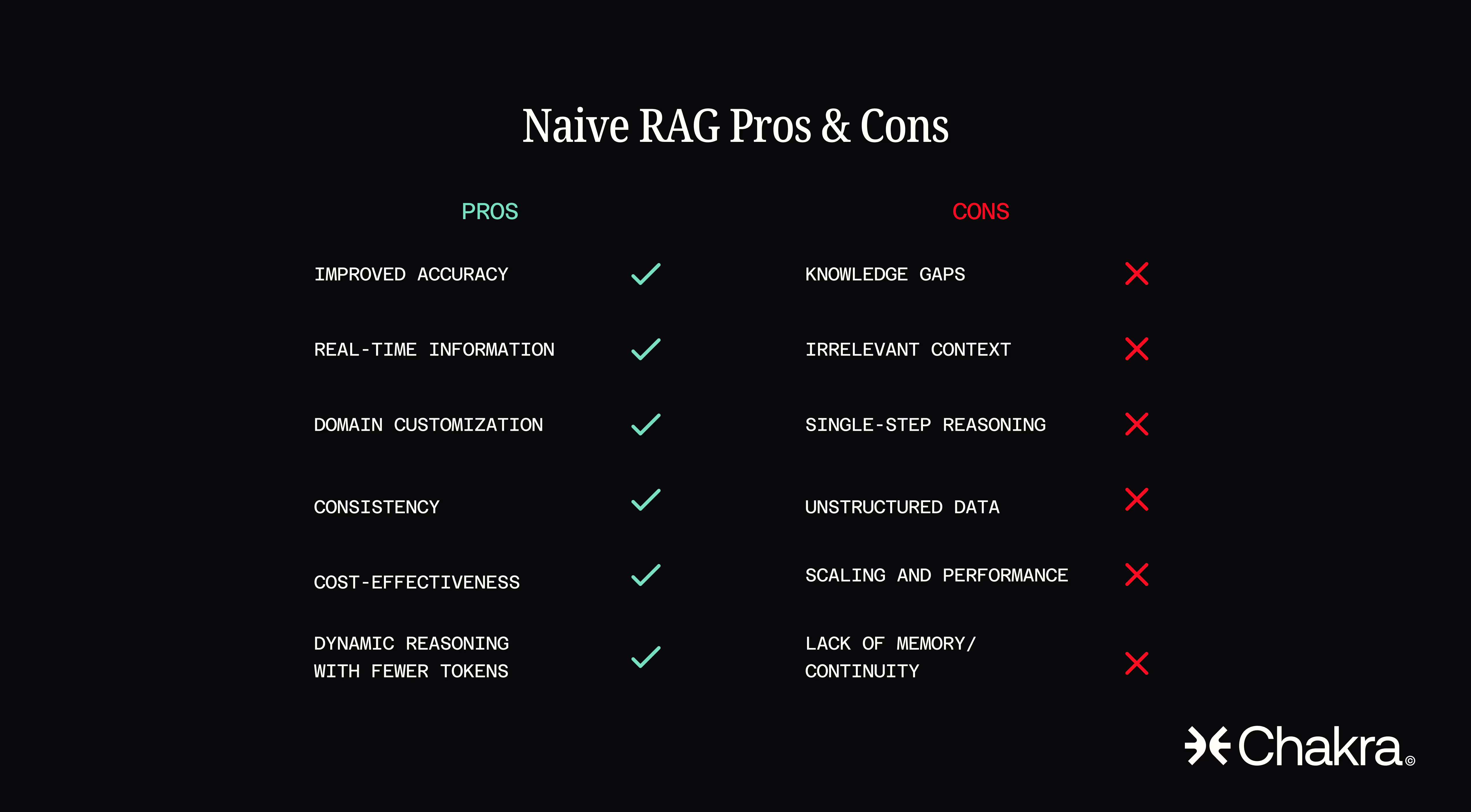

Naive RAG: The Basic Pipeline and Its Limits

The simplest RAG implementations, aka “naive RAG”, follow the straightforward recipe seen in Figure 1 earlier. This single-step retrieve-and-read pipeline works for many basic use cases, and naive RAG is popular because it’s easy to set up (especially with tools like LangChain or LlamaIndex that provide templates and often yield decent results with little tuning).

How naive RAG works:

- Query embedding (See figure below): Vector similarity is the core mechanism behind how naive RAG retrieves relevant information. The user’s query (or keywords from it) is converted into a numerical representation, called a vector embedding, that captures its meaning. The embedding is then matched against a vector database or search index of documents. Instead of relying on exact keyword matches, vector similarity identifies text that’s semantically relevant, even if the wording is different.

- Retrieval: The top-𝑘 most contextually useful chunks of text are then retrieved. This could also be a traditional keyword search or a combination of both, but in naive setups, it’s often pure vector similarity.

- Context augmentation: The retrieved text chunks are linked together (along with their source titles or URLs) into the prompt, typically before the user’s query or in a fixed template. For instance: “Using the information below, answer the question. Context: [retrieved text]. Question: [user query]”.

- LLM generation: The language model processes this augmented prompt and produces a final answer. The answer will incorporate the provided context (e.g., quoting a fact or using domain-specific terms from the retrieval) rather than solely relying on its internal knowledge.

This naive approach is effective for factoid questions or simple tasks. If the needed info is in the context, an LLM will usually incorporate it into the answer. Many production systems today use this pattern, even with small tweaks like a re-ranking step for better context selection.

However, naive RAG often struggles in the reality of unstructured real-world data and complex queries.

Developers who deploy naive RAG soon discover its limitations, especially at scale.

This is where naive RAG falls short:

- Knowledge Gaps: RAG is only as good as what it retrieves. If the right document isn’t surfaced (due to poor indexing, wording mismatches, or weak embeddings), the LLM lacks ground truth and may guess or give vague answers. Naive RAG offers no fallback when no relevant info is found.

- Irrelevant Context: Sometimes, retrieval returns text that looks relevant but lacks the needed answer. Naive RAG often blindly includes the top-𝑘 results (even if they’re redundant, irrelevant, or conflicting), forcing the model to sort through noise, which can lead to incorrect answers.

- Single-Step Reasoning: Basic RAG relies on a single retrieval step, which falls short for complex questions. It can miss key info if multiple sources or reasoning steps are needed, like comparing company and industry data or chaining facts. This limits naive RAG to simple, one-shot queries.

- Unstructured Data: In theory, RAG works with raw text, but real-world data is often unstructured. PDFs, slides, tables, and images often don’t extract cleanly, and naive setups that skip preprocessing can feed junk into the model. Without cleaning and structuring the data, RAG risks producing useless or misleading answers.

- Lack of Memory/Continuity: Naive RAG is typically stateless; it forgets past queries and context. Follow-up questions trigger fresh retrievals, often repeating work or missing connections, unless the user restates everything. Limited history hacks exist, but they still hit context window limits.

- Scaling and Performance: As a document/data collection grows, naive RAG can struggle. Simple vector search may slow down or return less relevant results at scale, and dumping too many tokens into prompts can drive up costs and latency. Without smart retrieval and structuring, naive RAG quickly hits its limits.

A basic RAG pipeline is a good starting point, but it's not the whole story.

When naive RAG meets unstructured data and complex user needs, it often struggles.

This has prompted the rise of more sophisticated “agentic” RAG approaches – essentially, RAG that’s smarter about how it retrieves, uses tools, and maintains context.

Agentic RAG & Tool Use: Beyond the Simple Search

Agentic RAG improves on naive RAG by making the LLM an active problem-solver. Like a research analyst, the LLM is able to break down complex queries, run multiple searches or API calls, and generate intermediate notes or calculations as needed.

The process becomes iterative: retrieve → reason → retrieve again → compose answer until the agent is confident it can answer the user.

Key features of agentic RAG:

- Planning and Multi-hop Retrieval: Agentic RAG enables multi-step retrieval, letting the LLM plan and gather information in stages. It might first search for A, then realize it needs B, and query again, repeating until it can answer. This handles complex, multi-source queries more effectively.

- Tool Use and Actions: Agentic RAG lets LLMs use external tools like web search, APIs, or code execution to go beyond static text. The model can fetch live data, run calculations, or query databases by outputting structured commands, enabling a loop of actions and responses until a final answer is reached.

- Interactive Memory / Scratchpads: Agentic RAG uses memory modules to store intermediate results, letting the agent recall key info across steps without overloading the prompt. This persistent context ensures the agent doesn’t forget what it’s learned mid-task, a crucial upgrade over stateless setups.

- Multiple Agents (Specialization): Some Agentic RAG setups use a team of specialized agents, such as a Researcher, Solver, and Responder, that work together to tackle complex tasks. This multi-agent RAG approach divides work like an assembly line, with a coordinator agent overseeing the process. It boosts performance on tasks too complex for a single model.

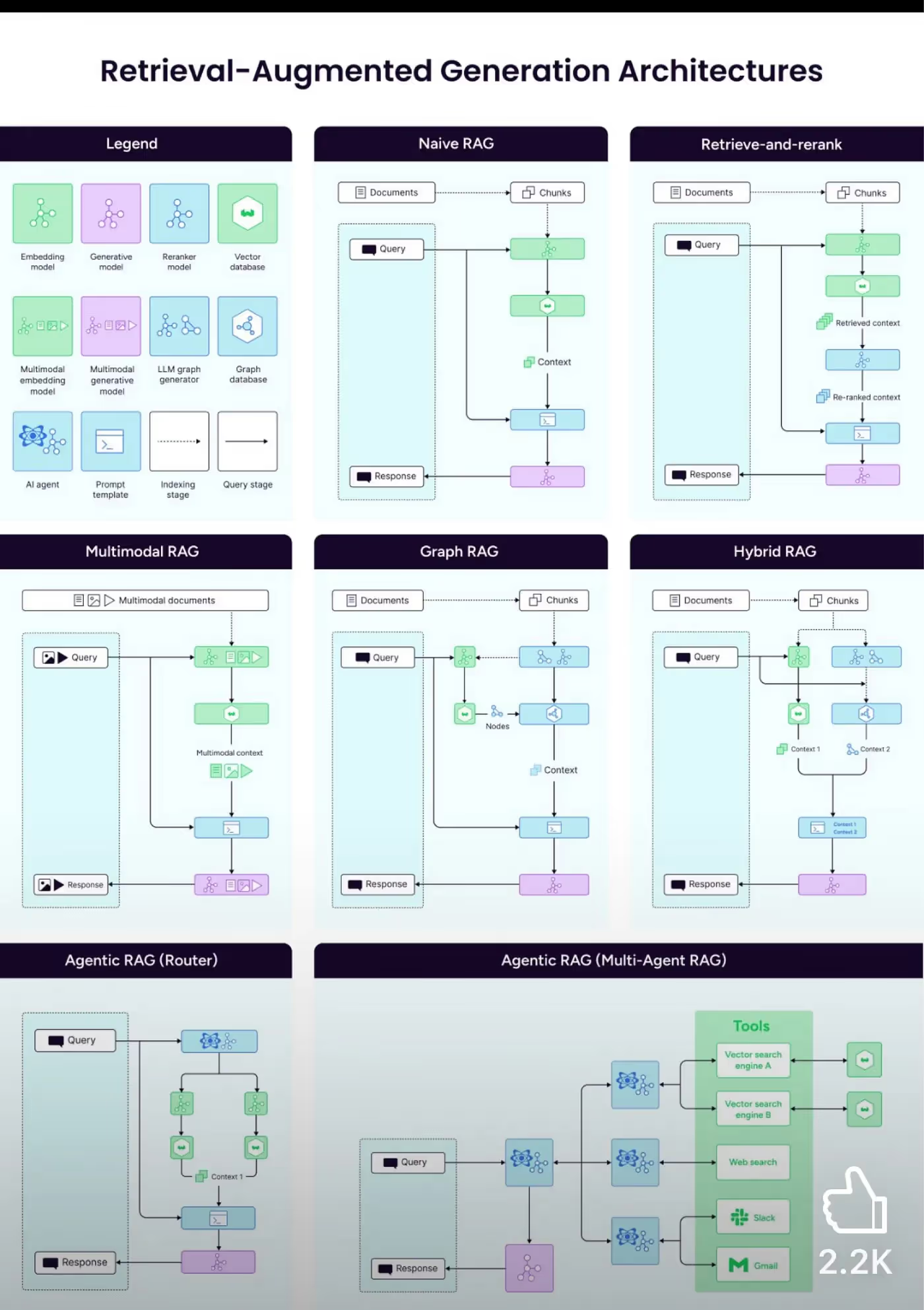

It’s worth noting that agentic RAG is a spectrum, not a single architecture, and we can view RAG as a design space with many possible configurations.

RAG ranges from the classic single-step RAG to hybrid agent-driven approaches.

One architecture uses a reranker model to double-check the relevance of retrieved data before it goes to the LLM, improving the quality of context.

Another uses a knowledge graph in addition to vector search, so that relationships between entities can be traversed. This is useful for complex queries that benefit from structured knowledge.

Yet another describes a single LLM agent deciding how to retrieve, essentially agentic RAG, with one agent planning its retrieval strategy.

And one of the most advanced architectures features multiple specialized agents collaborating, coordinated by a master agent to handle various aspects of the task.

RAG is not a one-size-fits-all technique, but a rich toolbox of strategies.

In some cases, a simpler RAG approach with a strong reranker might suffice; in others (especially open-ended or multi-step tasks), an agentic approach is needed.

We can think of this evolution as RAG 1.0 → RAG 2.0: RAG 1.0 was “plug in a vector DB and you’re done,” whereas RAG 2.0, Agentic RAG, is about robust retrieval + reasoning + verification.

Many production systems are starting to incorporate elements like hybrid search (combining vector similarity with keyword matching for better recall), multi-step workflows, and even post-generation answer checking (having a secondary model verify the answer against sources, a kind of automated fact-check before finalizing the answer).

Leading AI companies are actively exploring this space.

In 2023, OpenAI introduced tools and plugins for ChatGPT, enabling it to act more like an agent (e.g., browsing or running code), an early form of agentic RAG.

In 2024, Anthropic unveiled MCP (more below) for Claude to interface with data sources in a structured way.

Google’s Vertex AI platform supports building multi-step pipelines (and with their new Gemini model, they emphasize grounding and tool use as first-class features).

Even vector DB providers like Pinecone note that naive usage isn’t enough; models need two-stage retrieval, metadata filters, and iterative querying for the best results.

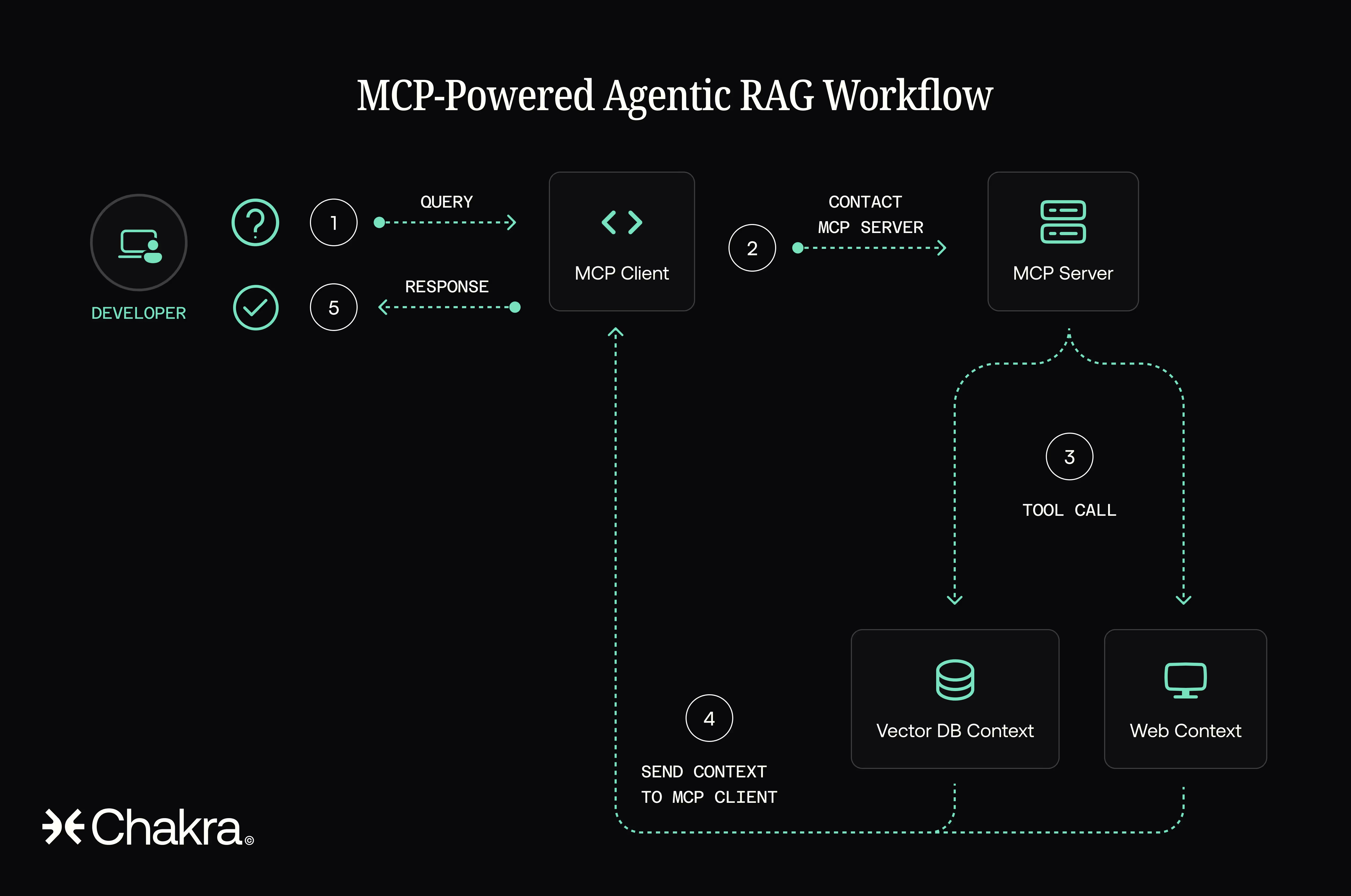

Agentic AI can now figure out what info it needs and how to get it, but unlocking this fully requires infrastructure for managing complex context. This is where MCP comes in.

Protocols like MCP: Enabling Context, Memory, and Agent Coordination

As agents take on more complex tasks like retrieval, tool use, and collaboration, managing context becomes a challenge. The Model Context Protocol (MCP) addresses this by standardizing how context is handled.

We’ve covered MCP and A2A at length in our primer on communication protocols, here. For a quick refresher, MCP is a standard protocol for AI memory and tool use, like a “USB-C port” for connecting agents to data, tools, or other agents. It uses a simple message-passing system (based on JSON-RPC) for discovering and calling capabilities.

An MCP server could be a vector database, a SQL database, a web search service, a calendar API, an email tool, or even a memory store. The key is that each server advertises a standardized interface (a schema of functions it can perform) and the agent communicates with it via structured messages, rather than scraping or bespoke code for each tool.

Why does MCP matter for RAG and agentic AI?

Because it provides the essential infrastructure for shared context and persistent memory across interactions, with five key benefits:

- Persistent Long-Term Memory: Traditional LLMs rely solely on the prompt for memory, which is limited and short-lived. MCP enables agents to connect to external memory (like vector databases or scratchpads), allowing them to store and retrieve information across steps or sessions. This enables agents to build and utilize context over time, making RAG more efficient and AI more truly contextual.

- Shared Context Across Tools: When agents use multiple tools in sequence, context often gets lost between calls. MCP fixes this by providing a shared context layer, so all tools stay in sync. For example, during event planning, calendar, email, and spreadsheet servers can access the same context, like an event ID, without manual handoffs. This keeps workflows smooth and coherent.

- Multi-Agent Collaboration: MCP enables multiple agents to collaborate by sharing context through a common interface, akin to a shared “blackboard” memory. Instead of clunky handoffs, agents can read and write to shared state, making coordination smoother and enabling scalable multi-agent systems.

- Tool Interoperability & Reuse: A key benefit of MCP is standardization. Before, AI tool integrations were custom and fragmented. MCP provides a common protocol, so tools only need to be built once to work across any MCP-compliant agent. This year, over 1,000 community-built MCP servers exist for tools like Slack, Git, CRMs, and vector databases. This modularity makes RAG systems more powerful, flexible, and easier to extend.

- Structured, Two-Way Interaction: MCP enables structured, stateful interactions between agents and tools. Instead of guessing at prompts, agents send JSON-RPC requests and get clean, schema-validated responses. This allows for reliable multi-step operations, like refining database queries or analyzing uploaded data, without the fragility of stateless text-based calls.

MCP supercharges RAG by enabling agents to retrieve data from diverse sources within a shared context, recall past results, and build complex pipelines without requiring custom glue code. The agent simply knows how to interact with any MCP-compatible tool.

Chakra showcases how MCP powers advanced RAG by seamlessly connecting LLMs to structured data and real-time streams. In a Chakra MCP workflow, an AI agent can discover a company’s data source, generate a SQL query, fetch results, and provide answers in real-time. MCP ensures this happens securely and with persistent context, enabling agents to reason over rich, live data without custom integrations.

Conclusion

The shift from naive RAG to agentic RAG marks a significant evolution in AI, from simple retrieval to context-aware agents that plan, reason, and utilize tools.

Naive RAG introduced grounding LLMs in external data but revealed the limits of one-shot pipelines.

Agentic RAG addresses those shortcomings by enabling multi-step reasoning, tool use, and collaboration across agents.

Protocols like MCP make contextual awareness possible by providing shared memory and seamless integration across tools and sessions.

At Chakra, we’re shaping RAG + MCP workflows, helping to build data systems where AI doesn’t just answer questions but learns, acts, and remembers.