1. What Are Computer Use Agents? Defining a New Breed of AI

Computer Use Agents (CUAs) are AI systems designed to operate computers the way humans do: by seeing the interface, reasoning through tasks, and taking actions via keyboard and mouse.

CUAs combine breakthroughs in multimodal understanding (vision + language) with sequential decision-making.

1.1 GUI + OCR

Most agents rely on transformer-based models that combine visual encoders with LLMs. They “see” the interface through a screenshot, interpret what’s on screen, and generate a sequence of actions (like clicks, typing, or scrolling) to follow instructions and complete tasks.

The typical workflow is iterative: the agent captures a screenshot, determines the next step toward its goal, executes it, and then re-evaluates by taking another screenshot. This perceive-think-act loop continues until the task is completed or the agent encounters an error, allowing the agent to carry out tasks on standard Graphical User Interfaces (GUIs) without specialized APIs.

Agents rely on Optical Character Recognition (OCR) to read text, object detection to identify buttons or fields, and an internal model of the task to plan multi-step sequences. OCR enables models to "read" what is displayed on the screen by decoding the text embedded in the visual input.

At a high level, an agent tasked with booking a flight will autonomously navigate to an airline’s website, fill out forms, select dates, and perform the same clicks and keystrokes a human would.

1.2 SOTA CUAs

Remarkably, these agents can already handle a variety of applications, from web browsers to simple desktop apps, and have achieved narrow superhuman performance on certain benchmarks.

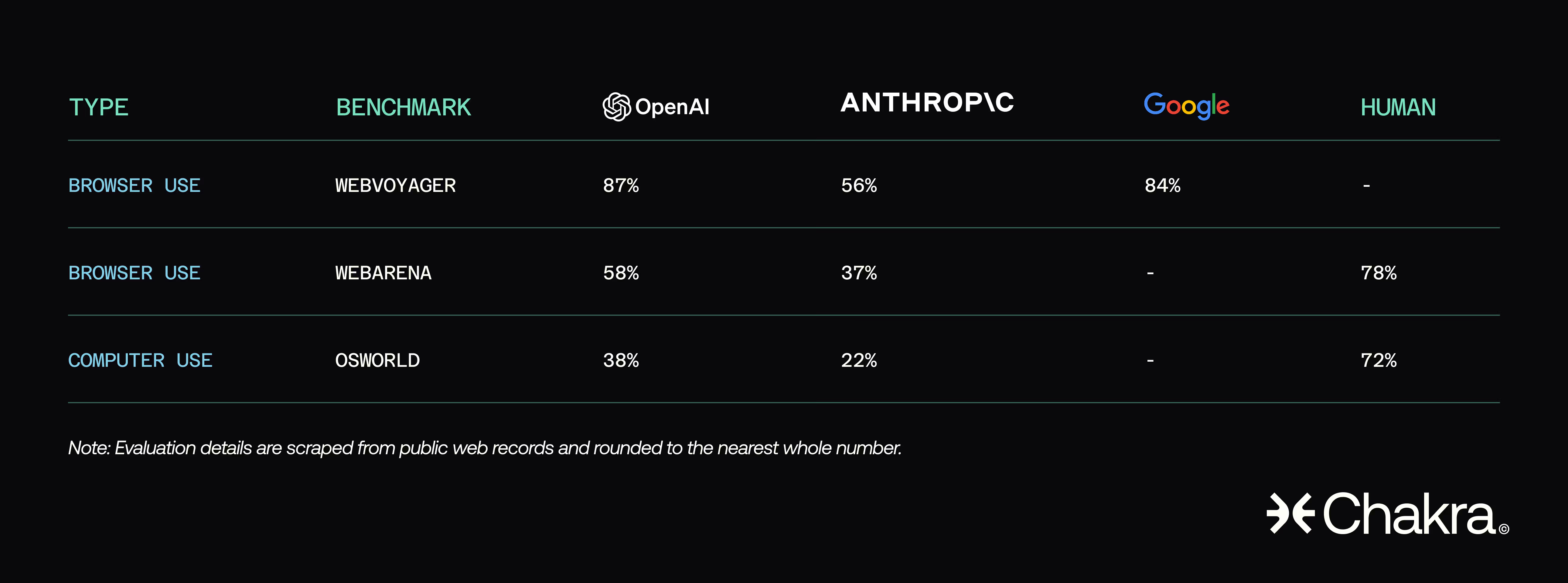

OpenAI reports that its latest CUA model, used in ChatGPT Operator, sets new records on web-based task suites like WebArena and WebVoyager. On WebVoyager (live website tasks), the top agent succeeds in ~87% of tasks, nearly matching a human’s success rate on those simpler navigation tasks.

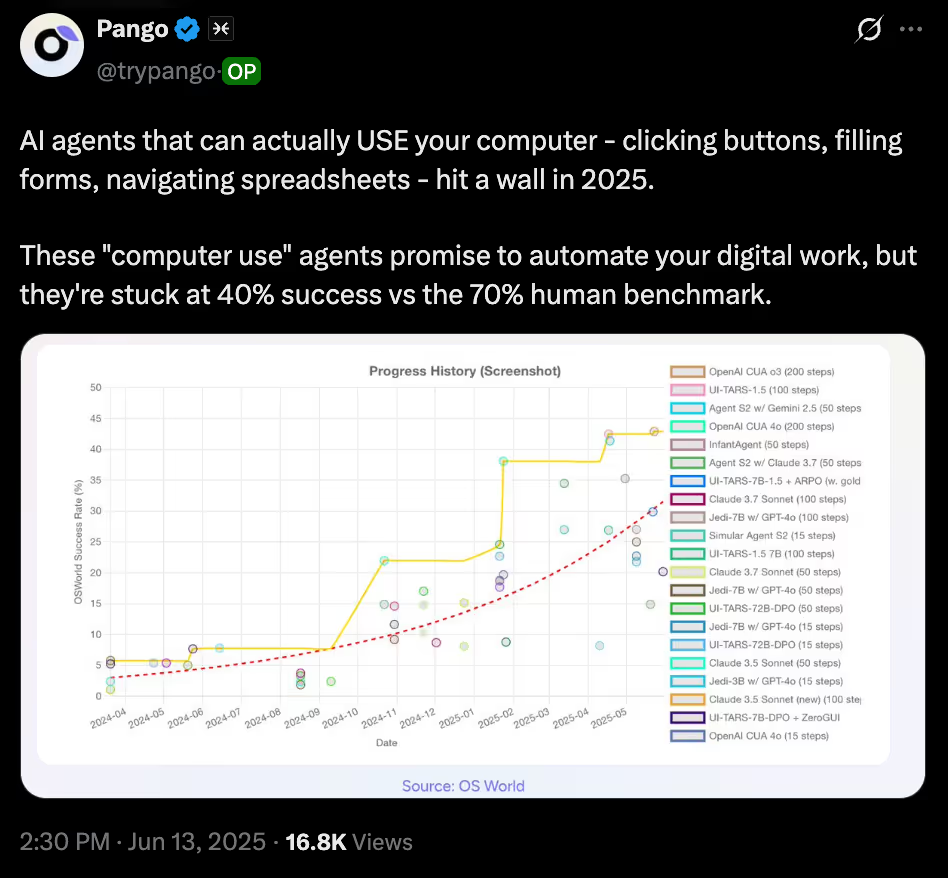

However, on more comprehensive benchmarks that span many apps and complex goals, AI still falls far short of human capability.

On the OSWorld benchmark (a suite of 369 realistic computer tasks), OpenAI’s Operator, the leading agent, achieves a 38% task success rate, compared to over 72% for human users.

One tradeoff worth noting: as CUAs take more steps to complete a task, their speed tends to suffer. Operator achieves strong performance, but it’s still noticeably slower than a human carrying out the same workflow.

Anthropics’ Claude-based agent was the previous leader on OSWorld at 22% success, and other models trail even further.

Today’s cutting-edge computer use agents are impressive but not yet generally reliable: they can excel at constrained web procedures, yet struggle with the breadth and unpredictability of real-world computer use.

Let’s change that.

Enter Pango.

The future of data used to train real-world CUAs.

Before diving into Pango’s core use case, let’s start by exploring the addressable market for computer use agents, the current “Operators”, datasets driving progress, training methods behind them, and key bottlenecks they still face.

2. Market Scale: The New Wave of Economic Potential

CUAs are aiming to become universal digital workers.

They can take on a wide range of knowledge work, including researching and compiling reports, filling out enterprise forms, managing inboxes and calendars, performing data entry and reconciliation, and providing customer support.

Human Computer Workflow = CUA Workflow

Already, parts of jobs are being automated in consulting, finance, marketing, sales, healthcare administration, and beyond. McKinsey researchers estimate that generative AI (which includes CUA) could add $2.6 to $4.4 trillion in economic value annually across business use cases by dramatically boosting productivity in knowledge work.

We know the market size for successful computer use agents will be enormous.

Every knowledge worker uses a computer, and augmenting each with an AI assistant could unlock trillions in value (the entire GDP of a large country) across the entire spectrum. Even partial automation of routine tasks could save billions in labor costs, driving intense investment into the space.

The race is on to build general-purpose “digital employees” that can operate across workflows.

Early winners, even among small startups, could see billions in enterprise value given the breadth of use cases.

Entire professions may evolve as agents handle repetitive GUI tasks, freeing humans for more creative or interpersonal work.

In the near term, agents will act as copilots: augmenting, not replacing, human workers. Current limitations keep us in the assistive phase, not full automation. But this is shaping up to be the next wave of robotic process automation (RPA), powered by smarter AI, capable of navigating unstructured software.

The incentives to solve this are massive.

3. The Current Landscape: Operator, Claude, Mariner & More

Let’s dive into the live products and prototypes leading this frontier. Several high-profile computer use agents have emerged over the past year:

3.1 OpenAI – ChatGPT “Operator”

In late 2024, OpenAI released Operator, an experimental agent integrated with ChatGPT. Powered by a custom GPT-4 vision model (GPT-4o, “o3” series), Operator runs in the cloud via a remote browser and currently leads many benchmarks thanks to its strong reasoning and web navigation capabilities.

Just describe what you want (e.g., “book me a round-trip flight next month”), and Operator will try to complete it by navigating websites and taking actions autonomously. Powered by a CUA model combining GPT-4 vision with action training, it can handle tasks like form-filling, online shopping, data scraping, and even posting on social media. It does these directly through the web UI, without APIs.

Each task in Operator runs in a fresh VM with a controllable browser, allowing multiple tasks to run in parallel, similar to several browser tabs. OpenAI is partnering with platforms like DoorDash, Instacart, and Shopify to optimize site-specific performance. Operator defers to the user for sensitive actions like logins or payments and is sandboxed to browser-only tasks. Despite these limits, it’s the most advanced public example of a computer use agent today.

3.2 Anthropic – Claude with Computer Use

Anthropic integrated computer-use capabilities directly into Claude, its conversational AI.

The “Computer Use” mode, built on a ~100B-parameter Claude 3.5/3.7 model, emphasizes safety and reliable multi-step reasoning, thanks to its Constitutional AI training. Released in October 2024, it's available via the Claude API.

When enabled, Claude can control a virtual desktop, interpreting screenshots and issuing low-level GUI commands, such as mouse movements and keystrokes. Trained on simple apps (e.g., calculator, text editor), it learned to generalize to others, including web browsers, despite minimal task-specific training.

Anthropic flipped the usual paradigm by adapting the model to existing human tools, not the other way around. Like Operator, Claude works in a screenshot–action loop and can perform basic tasks across most GUIs, though with current limitations.

Claude’s computer use is still somewhat slow and prone to errors. It struggles with drag-and-drop and dynamic content, since it processes screens as static images, missing things like pop-ups or animations.

Claude remains an early leader, and the computer-use feature is currently in public beta via API.

It includes safeguards, like rejecting risky actions and resisting prompt injection. While still supervised and limited, it serves as a strong early proof of concept with ongoing improvements.

3.3 Google – Project “Mariner” and Gemini API

Google’s Gemini-based agent, code-named Mariner, combines its native multimodal models with Chrome integration, enabling strong performance on live web tasks and seamless use of services like Maps and Gmail.

Google’s approach includes a developer-facing platform through the Gemini API, which offers “computer use” capabilities, as well as a consumer-oriented prototype called Mariner, designed to handle browser tasks from natural language prompts.

Mariner runs as a Chrome extension, limited to the active tab for safety, preventing it from interfering with background windows. It’s optimized for Google’s ecosystem, excelling at tasks involving Search, Maps, or Workspace apps. As of 2025, both Mariner and the Gemini agent API remain in limited preview.

Google is already embedding agent capabilities into products like Gmail and Android, enabling AI to draft emails or perform phone UI tasks. With its massive reach, these agents could eventually ship natively in Chrome or Android.

The key question is whether Google can match OpenAI’s core tech. Early results suggest Gemini is competitive, scoring ~84% on WebVoyager, and Mariner’s “world-model” planning may offer added reliability.

3.4 Others

Beyond these big three, startups and research labs are also in the mix.

Adept AI (whose ACT-1 demo in 2022 was an early precursor) is developing enterprise agents that plug into everyday software. Adept’s model “Fuyu” introduced a multimodal architecture for actions and garnered major funding in a $350M 2023 Series B (pre-product).

Emerging projects like Simular’s Agent S2 are experimenting with modular “mixtures of experts” for different UI contexts, reporting promising results on long-horizon tasks. Their CUA achieved ~34.5% on very long 50-step OSWorld tasks via specialized planning modules, which falls nearly in line with OpenAI’s Operator.

Microsoft is building Copilot Studio, which aims to bring agentic automation into the enterprise suite. It reportedly orchestrates multiple specialized agents (for Office apps, for developer tools, etc.) and focuses on enterprise requirements like data privacy (no data leaves the corporate tenant). Microsoft’s strategy is to weave agent capabilities into its Copilot products so that a business user can not only get AI-generated suggestions but also have the AI carry out tasks in Windows or Office automatically.

The open-source community has produced early agent frameworks (e.g., Auto-GPT & BabyAGI in 2023 showed autonomous goal-driven loops), but these were limited by lack of strong vision integration.

China’s tech companies (Baidu, Alibaba, etc.) are also entering this arena with their own prototypes for AI office assistants that can operate software, often as extensions of their large models.

China’s tech giants are aggressively entering the AI office‑assistant space. Baidu and Alibaba have launched their own agents, but ByteDance’s UI‑TARS stands out. It ranks #2 on the OSWorld benchmark (after Operator) using 100‑step tasks. UI‑TARS supports both browser and desktop automation, advancing the field alongside its large‑model extensions.

We can expect a proliferation of such agents in specific domains (finance, design, IT support, etc.), each tuned to the quirks of relevant software.

The current landscape is a mix of a few robust general agents in early access and many niche or experimental agents under development.

All these systems are being rolled out cautiously due to their novelty and potential risks; no one wants a rogue AI on their computer. Providers are implementing safeguards and collecting feedback, with broader releases anticipated as trust increases.

Competition is intense, with each new model (like GPT-4o, Claude 3.7, and Gemini v2) pushing performance forward. For users and companies, it’s an important period as agents shift from demos to real tools that can handle digital busywork once done by humans.

4. Data: The Fuel Driving Agents – Datasets and Ownership

Teaching an AI to use a computer requires examples of how humans use computers.

Over the past couple of years, the research community has built a trove of several key datasets to propel development of CUAs:

4.1 Mind2Web

One of the most influential datasets, Mind2Web, is a large collection of 2,350 web browsing tasks crowdsourced from 137 real websites across 31 domains. Each task is an open-ended instruction (e.g., “Find and follow Elon Musk’s Twitter profile” or “As a Verizon user, upgrade my iPhone plan”) along with a recorded sequence of human actions to complete it.

Crucially, Mind2Web utilizes real websites (not simplified simulations) and encompasses a diverse range of domains, including travel booking, social media, e-commerce, banking, and government sites, among others. This diversity tests an agent’s ability to generalize.

Mind2Web offers rich data for training and evaluation, including DOM snapshots, screenshots, and the exact actions taken by human demonstrators. It’s become a standard benchmark for CUAs.

However, agents still struggle to generalize. If trained on certain websites, their performance drops significantly when faced with new layouts. It’s often below 20% success, and more than 50% lower than familiar domains.

The takeaway is clear: agents need experience across diverse datasets for enterprise use.

.avif)

4.2 GUI-World

While Mind2Web focuses on websites, the GUI-World dataset encompasses a range of graphical interfaces, including desktop and mobile applications and even XR (extended reality) interfaces.

GUI-World contains over 12,000 recorded GUI interaction videos, paired with instructions and Q&A, spanning six scenarios and eight types of tasks. It includes tasks in desktop software (such as editing a document in Word), mobile apps, multi-app workflows, and dynamic content, including video players.

Uniquely, GUI-World used a Human-LLM collaboration to structure data: human annotators gathered video clips of GUI usage and provided captions, then GPT-4V was used to refine those descriptions and generate diverse question-answer pairs about the GUI state. All content was then verified by humans.

The result is a structured dataset that tests an agent’s ability to handle sequential, dynamic GUI content, not just static web pages. Evaluations on GUI-World have shown that many multimodal agents struggle, especially with dynamic elements (animations, changing content). Image-based models miss temporal info, and video-based models are still too early to fully understand GUI videos.

By covering things like desktop OS windows and cross-app interactions, GUI-World broadens the horizon beyond the browser.

Microsoft Research has used it to benchmark how well current agents can follow a multi-step software tutorial or handle a pop-up dialog. This dataset is paving the way for agents that are as comfortable automating Excel or Photoshop as they are automating websites.

.avif)

4.3 OSWorld

OSWorld is more than just a benchmark; it’s an entire emulator environment + task suite designed for testing multimodal agents on real computer tasks.

OSWorld provides a sandbox with operating systems (Linux, Windows, Mac) where agents can be evaluated on tasks like “schedule a meeting in Outlook, then send a confirmation email” or “rename a batch of files following a pattern”.

It contains 369 tasks with reliable, reproducible setup and evaluation scripts. Each task has an initial state (e.g., specific files present, certain apps open) and a success criteria script that checks if the agent accomplished the goal. This makes it a powerful research tool; one can measure exactly if an agent succeeded and even where it went wrong.

The OSWorld creators compared it to prior agent benchmarks (like MiniWob, WebArena, etc.) and showed OSWorld is more comprehensive and realistic.

We know from the dataset tests on OpenAI’s Operator that while a human can solve about 72% of the tasks, the SOTA AI agent’s success is only 38%. This represents a significant leap from the ~12% reported in the initial OSWorld paper, and that improvement resulted from the use of better models and training.

The stark human vs. CUA gap is an early reality check that even the “easy” looking tasks (like using a file explorer) are quite hard for agents. OSWorld is an open-source platform (developed by a team including HKU, Salesforce Research, CMU, etc.), which many researchers utilize to test improvements and share their results.

.avif)

4.4 Other Data Efforts

There are numerous other datasets and simulators: WebArena (by Meta AI) with offline copies of websites for controlled training, WebShop (tasks in an e-commerce setting), MiniWoB++ (a classic set of toy web tasks in a synthetic browser), AndroidWorld environments for mobile UI tasks, etc. Each serves as a training ground for specific skills.

There’s also the question of who owns the UI data: Websites and software UIs are public to use, but training an AI on all interactions with Gmail’s interface might raise platform policy questions. The current approach has been academic openness (Mind2Web is freely available, as is GUI-World, etc.) and partnership for sensitive data.

Some datasets like GAIA and WebGPT logs have been created by scraping or observing real usage, but privacy and consistency issues arise.

As a result, high-quality training data still comes from either crowdworkers performing scripted tasks or synthetic generation (having an AI pretend to be a user, to generate more data). We’ll discuss synthetic data shortly, as it’s a key part of how these agents are trained.

The dataset landscape is growing rapidly, with open academic contributions like Mind2Web and OSWorld laying the groundwork. New sets, like GUI-World, are adding depth, but coverage is still limited. Mind2Web’s 2,000 tasks feel large by benchmark standards, but it’s a drop in the ocean compared to the millions of unique tasks humans perform every day.

This is where new approaches, including crowdsourced platforms like Pango, aim to fill the gap by capturing a much larger volume and variety of usage data.

The more diverse and extensive the agent’s training data, the stronger and more multi-purpose we can expect its performance to be.

5. Training Computer Agents: Imitation, Reinforcement, and Synthesis

How do we turn raw interaction data into a working CUA?

The training process combines techniques from supervised learning and reinforcement learning in a hybrid pipeline to teach the model both the “how” and the “why” of using a computer:

5.1 Supervised Learning (Behavior Cloning)

First, the agent learns to imitate human demonstrations. Using datasets like Mind2Web or GUI-World, engineers train the model in a supervised manner: given an instruction and a screen state, predict the next action a human would take. This stage, often referred to as behavior cloning, is crucial for kickstarting the agent’s skills. By mimicking thousands of step-by-step examples, the model develops a basic understanding of UI affordances (knowing that a button labeled “Search” should probably be clicked, for instance).

Research indicates that pure imitation can help agents reach a certain level of competency, but they often plateau. They may overly replicate human trajectories, including mistakes or inefficient paths. Behavior cloning provides a strong foundation and can achieve reasonably good performance on seen tasks very sample-efficiently (with enough demonstrations, an agent can reasonably solve the same tasks in training).

Techniques like Dataset Aggregation (DAgger) can be used here, where the agent’s own mistakes are flagged and new demonstrations are added to correct them iteratively.

5.2 Reinforcement Learning (Fine-Tuning with Feedback)

After supervised learning, the agent is further trained with feedback on outcomes. At this stage, instead of following human actions exactly, the agent is allowed to try various actions and is rewarded for achieving its goals.

A common approach is using reinforcement learning (RL) with a reward model.

One can train a reward function that scores an agent’s trajectory, like assigning +1 for completing the task and 0 otherwise, or more nuanced scores for intermediate progress. The agent then practices in a simulated environment (or on tasks) and gets reward signals to improve its policy.

Proximal Policy Optimization (PPO) is a popular RL algorithm used in these agents, as it’s stable and works well with large models.

OpenAI, Anthropic, and others all report using RL fine-tuning to improve agents’ success rates beyond imitation alone. Hybrid approaches (imitation + RL) have shown 30–40% performance improvement over supervised learning alone on these tasks.

RL helps the agent learn when to deviate from demonstrations. Maybe the human took a suboptimal route, and the agent finds a shortcut that still completes the task (earning the same reward). It also teaches the agent to recover from errors: if a step doesn’t work as expected, an agent trained with trial-and-error is more likely to try alternative actions instead of getting stuck.

The combination of imitation (to learn basic competence) and RL (to refine and exceed human performance where possible) is now standard.

5.3 Synthetic Data Augmentation

As mentioned, one big challenge is the limited quantity of human-labeled demos. To address this, researchers have developed clever methods to generate synthetic training data, which can dramatically expand the agent’s experience.

One approach is using agents to generate new trajectories on their own.

The OS-Genesis project had an agent randomly explore an environment (like clicking around an Android interface and web browser) to collect tons of state-action pairs, which were then filtered and clustered into meaningful “tasks” after the fact.

Reversing the approach by first collecting large amounts of raw interaction data and then defining tasks has improved success rates on some benchmarks. This method captures scenarios that humans hadn’t explicitly demonstrated.

There’s evidence that synthetic data, when carefully generated, can match or even exceed the quality of real demonstrations. A May 2025 PC Agent-E training flow on Claude 3.7 Sonnet found that with only 312 human demonstrations as a seed, plus a large amount of synthetic augmentation, the CUA achieved 141% relative improvement over a baseline model. This effectively outperformed models trained on far more real data.

5.4 Fine-Tuning & Specialization

After broad training like methods listed above, there’s often an additional fine-tuning for specific contexts. OpenAI might fine-tune Operator on high-value websites by giving extra training data or doing RL with those sites.

Enterprise deployments involve fine-tuning on company-specific apps or using LoRA (Low-Rank Adaptation) or other parameter-efficient tuning to quickly specialize the agent to a new interface.

LoRA adapters have been used to adapt giant models with only a small computational cost, achieving ~95% of full fine-tuning performance using LoRA adapters at 1/10th the compute, which is very practical for iterating on these models.

Throughout training, evaluation is crucial. Teams continuously evaluate agents on held-out tasks (like a subset of Mind2Web tasks not seen in training) to see how well changes are improving actual performance.

Interestingly, training these agents is not extremely data-hungry by modern AI standards – we’re talking tens of thousands of trajectories, not billions. The limiting factor is more the quality and coverage of data than sheer volume. Each trajectory is complex (with many steps and rich visuals), so even a few thousand cover a lot of ground.

However, for the agents to truly generalize, they likely need an even broader training distribution, which is where crowdsourced real-world data generation (like Pango’s approach) could be transformative. With the current training regimes (imitation + RL + synthetic), we’ve seen rapid progress.

Maintaining that momentum will require feeding the models ever more varied and representative experiences.

6. Core Bottlenecks: Why Aren’t Agents at 100% (Yet)?

Despite the progress, today’s computer use agents have clear limitations that hold them back from achieving human-level performance.

Researchers have identified several core bottlenecks that need to be overcome:

- Planning and Reasoning: The hardest part isn’t seeing the screen, it’s planning what to do. Agents struggle with multi-step tasks, often looping or skipping steps they can’t reason through. Unlike humans, they lack strong internal planning. Techniques like tree search and task decomposition are promising, but reliable, goal-driven execution remains a key bottleneck.

- Long Contexts and Memory: Another challenge is managing the sheer volume of information on real interfaces. Webpages can exceed an agent’s context window, causing it to miss fields or forget earlier steps in multi-page workflows. Techniques like cropping or truncating help but aren’t foolproof. Without persistent memory, agents lose track of goals or overlook key details, especially in long or dense sessions. Solutions like extended context windows or external memory are in progress, but for now, limited recall remains a major bottleneck.

- Data Scarcity and Generalization: Agents perform well on familiar tasks but stumble on anything outside their training. A model trained on shopping flows might fail on a developer tool or spreadsheet. Even small UI changes can break a workflow, like a button moving after a site update. Fixing this means training on more diverse data. Pango aims to crowdsource real user workflows, while synthetic data helps introduce variation. Until agents see enough variety, even minor shifts will throw them off.

- Dynamic and Unpredictable Interfaces: Agents struggle with dynamic or visually complex interfaces. They operate in a stop-start loop (screenshot, act, repeat), so they can miss fleeting popups or changes between frames. Timing-based actions like hover menus or drag-and-drop are also tough, since most models aren’t trained for continuous gestures. Visual quirks, like low contrast or nonstandard layouts, can even trip them up too. And language is a challenge: agents trained mostly on English UIs may flounder on non-English ones. Fixing this will take better vision-language alignment and more globally diverse training data.

- Error Recovery and Adaptability: Agents still struggle with failure recovery. Unlike humans, they rarely have backup plans, if an action fails, they might freeze or repeat it blindly. In the real world, where errors are common, this rigidity is a major bottleneck. Current agents lack a high-level controller to adapt mid-task. Current research explores meta-planning or self-critiquing models, but these add complexity. For now, adaptability often depends on human oversight. Reducing that reliance is essential for fully autonomous agents.

- Safety and Trust: Safety remains a key operational bottleneck. Agents are sandboxed and monitored, which limits full autonomy. Operator, for instance, won’t enter passwords or complete purchases without user input. Tasks involving data deletion or emails often pause for confirmation. These guardrails are necessary but cap what agents can do alone. Developers err on the side of caution, trading capability for control. As reliability improves, some of these constraints may ease, but for now, human oversight is still required.

These bottlenecks define the research agenda for the next few years. Each is a non-trivial problem.

The encouraging news is that none of them look like brick walls; they are being actively addressed via larger models (for better reasoning), architectural tweaks (for longer context and memory), new training data (to cover more scenarios), and algorithmic innovations (for planning and safety).

The pace of progress is rapid. As these hurdles get cleared one by one, we can expect CUAs to go from novelty to dependable daily workhorses.

7. Introducing Pango as the Next Era of Data: Real Users in the Loop

To overcome the data scarcity and generalization bottleneck, a new approach is emerging: crowdsourced real-world data generation for training agents.

This is where Pango comes in.

Pango is a platform aimed at collecting massive amounts of high-quality, real user interaction data by paying everyday users to contribute their workflows.

Instead of relying on a limited number of crowdworkers performing scripted tasks, Pango scales up data collection by tapping into the countless tasks real people do on their computers daily.

Soon, users around the world will be able to opt in to Pango via a Chrome Extension, and computer use tasks can be logged (screen captures, action sequences) and sent as training examples.

In return, users get paid in USDC or gift cards for their data.

Pango specifically focuses on:

- Real users doing real tasks in Spreadsheets/Slides

- Error recovery patterns when things go wrong

- Multi-step workflows across applications

- And Diverse user behaviors and shortcuts

Over time, this will produce an unprecedented amount of real interactions.

Why is this important?

Because real user workflows are the gold standard of diversity and complexity.

If Pango can capture thousands of such multi-app workflows, agents can learn higher-level patterns. It also introduces more human-like behavior patterns (like checking one’s work or interleaving tasks). Synthetic data can simulate some of this, but it’s hard to get the nuances right.

Involving real users closes the loop between AI and the community.

It creates a virtuous cycle:

Users generate data → data makes agents smarter → smarter agents can help users more → more users engage and generate data.

This is analogous to how Google uses billions of search queries to refine its algorithms, or how Tesla uses drivers’ data to improve Autopilot.

Pango will do for computer use agents what those feedback loops did for search and self-driving.

And Pango only tracks activity users start. Users are always in control and can choose what/when they share.

Pango will allow users to structure their data by labeling their task (“this was me organizing my photos”), which gives rich context to the AI during training.

Pango is built as a subnet on Chakra, where contributors upload anonymized interaction traces and developers can access them as training data streams.

Why does this really matter?

Because it may be the key to reaching human-level performance. All the bottlenecks we discussed (planning, context, adaptability) could be mitigated by learning from vast experience.

Humans become expert computer users through years of varied practice; an AI agent may need the equivalent of that, which could be billions of logged actions across diverse scenarios.

Synthetic and academic datasets might get us to 50% of the way.

The remaining 50%, the long tail of weird tasks and real-world context, requires real data. Pango’s approach recognizes that and treats data generation itself as a scalable operation, not an afterthought.

Democratizing data has an inclusive effect. It’s not just Big Tech’s internal data – it’s an open pool that startups, researchers, and new entrants can use to compete. This accelerates innovation without data oligopolies.

With Pango, we hope to see a dramatic enrichment of training resources this year and beyond, fueling a new wave of much more CUAs.

8. Conclusion: The Road Ahead for Computer Use Agents

Computer use agents are rapidly moving from demos to real tools.

As training data grows and technical challenges are solved, these agents will get faster, smarter, and more reliable, especially in narrow domains where they may soon outperform humans.

Pango will accelerate this shift, feeding agents real-world usage and enabling smaller teams to build strong models. With the right data loop, agents can improve continuously.

Architectural advances, like memory-augmented models or hybrid planners, will help tackle reasoning and context gaps. And foundation models like ChatGPT, Claude, and Gemini will likely serve as the backbone, adapted for UI tasks.

In the long run, we will see a shift in Computer-Use Agents.

Natural language becomes the interface, and agents handle execution.

Just as GUIs replaced the command line, AI agents may redefine how we interact with software.

Together, let’s turn tools into teammates.