1. Introduction: From Model Access to Model Ownership

In the early days of AI, access to powerful models was a competitive edge. From roughly 2012 to 2023, breakthrough systems like OpenAI’s GPT-3 and Google DeepMind’s BERT lived behind API gateways and corporate firewalls. If you wanted their intelligence, you rented it as a service.

The “AI-as-a-service” approach meant that while developers could use state-of-the-art models, they didn’t own them in any meaningful sense. The core model weights, the training data, and the infrastructure all remain in the hands of a few select tech giants.

Today, we stand at the beginning of a new era in AI: from model access to true model ownership. There is a growing movement to not only open-source model weights but to empower communities to train and improve models collectively.

We’re entering the third epoch of AI, one defined by decentralized training and community-owned model development.

This shift didn’t happen overnight. Over the past 12 years, the open-source AI community has cracked open the black box of proprietary models.

We're witnessing a notable shift from reliance on closed APIs (such as OpenAI, Claude, Perplexity, and Grok) toward open-source LLMs (like Mistral, LLaMA, DeepSeek, and Falcon), driven by our increasing demand for greater control, customization, and transparency over AI models.

In February 2023, Meta set this shift in motion when they publicly released LLaMA’s model weights, marking the first time a copy of a model’s weights (the ownership of the model itself) had become accessible.

Yet, even as open models put state-of-the-art capabilities into the hands of the masses, a sobering reality remains: the training of such models is still primarily controlled by the same few powerhouse labs.

We gained the ability to use and fine-tune advanced models, but creating the next GPT-4 from scratch? That was—and largely still is—beyond the reach of any community. The infrastructure and cost requirements form a steep wall.

New developments are poised to tear down that wall.

Recent breakthroughs in distributed optimization techniques from teams like Prime Intellect and Nous Research, novel SWARM parallelism algorithms from Pluralis Research and Gensyn, and token-incentivized coordination are converging to enable something revolutionary—large-scale AI model training that does not rely on a single entity’s centralized infrastructure.

The central thesis of the third epoch of AI is simple. By decentralizing the training stack, we can turn the development of frontier AI from monopolistic control to a shared collective.

In a future where millions of people can contribute their spare GPU cycles, training a cutting-edge AI model becomes a collective act of creation. But coordination at that scale doesn’t happen by accident. It requires an incentive layer that can operate across borders, devices, and trust boundaries.

This is where crypto shines: as a programmable economic layer that turns raw compute into a tradable, verifiable, and rewardable resource.

Crypto doesn’t just enable participation—it aligns it. By using tokens, smart contracts, and decentralized protocols, contributors can be fairly compensated for their work, even if they’re anonymous and geographically dispersed.

The result? A permissionless training network where anyone can go from passive consumer to active owner of the models they help bring to life.

So why hasn’t this happened already?Why are we still relying on models built in someone else’s stack, under someone else’s terms?

To answer that, we need to examine the overlooked bottleneck at the heart of AI: centralized infrastructure.

2. Centralized AI: Historical Bottlenecks

While headlines focus on bigger models and faster breakthroughs, the real constraint on AI isn’t parameters, it’s infrastructure.

Training today’s most advanced models requires enormous resources: tens of thousands of high-end GPUs, massive energy consumption, and tightly engineered compute clusters.

This scale of investment has concentrated power in the hands of a few: OpenAI (via Microsoft Azure), Anthropic (via AWS and Google Cloud), Google DeepMind, Meta, and NVIDIA (which not only dominates the GPU supply but dictates the pace and direction of AI development).

In this landscape, it's not enough to have brilliant researchers or clever algorithms. What matters most is who controls the compute. Without $100 million to spend on a supercomputer-grade GPU cluster, training a frontier-scale model from scratch is out of reach. Compute isn’t just a tool. It’s a gatekeeper.

And in 2024, the gate got taller. Tech giants poured over $215 billion into AI-related infrastructure, locking in their lead with armies of GPUs.

Elon Musk’s xAI launched "Colossus," a supercomputer with 200,000 H100 GPUs, dedicated to training Grok 3. OpenAI reportedly spent over $100 million just on GPT-4, with GPT-5 facing delays from even greater complexity and cost.

This is not a level playing field. It’s an arms race. And the result is a compute cartel.

However, outside these elite clusters lies a vast, overlooked resource: millions of idle GPUs scattered worldwide in gaming PCs, research labs, and cloud instances. Together, they represent a latent global supercomputer. The challenge? Coordinating them.

That’s where crypto-powered compute protocols step in.

Platforms like io.net and Akash emerged to offer permissionless access to GPUs, offering substantial cost savings and scalability advantages over traditional centralized data centers.

Now, these protocols are being leveraged in the training of Prime’s INTELLECT-1, a 10-billion-parameter AI model and the first of its kind to be trained fully on decentralized infrastructure.

The opportunity point is clear: with the right incentives and coordination layers, we can turn this global patchwork of idle hardware into a cohesive training network.

Decentralized training is about breaking open the infrastructure bottleneck so that innovation can flow from many directions, not just top-down.

To this day, major LLM training has remained almost entirely centralized, and there is a technical wall that has separated distributed idle GPUs from cutting-edge model training happening in elite data centers.

To understand that wall, we need to look under the hood of how model training actually works, and why it was never designed for a decentralized world in the first place.

3. The Technical Wall: Why AI Training Was Never Meant to Be Decentralized

Today’s AI training workflows are inherently built for centralized supercomputers. Large-model training is typically performed on tightly coupled clusters of GPUs that reside in the same data center, connected by ultra-high-bandwidth networks. This design is a direct response to the needs of training large neural networks.

Two standard techniques underpin almost every large-scale training run: data parallelism and model parallelism.

- In data parallelism, massive training datasets are divided across GPUs, each processing a slice in parallel to accelerate training.

- In model parallelism, the model itself is split across devices to work around memory limitations.

Both approaches demand constant, high-speed communication between GPUs.

Gradients must be synchronized, activations exchanged, and parameters updated in near real time. The faster GPUs can talk to each other, the more efficient the training.

After each training step, gradients must be synchronized across devices. Model shards must exchange activations or parameter updates, and the GPUs must communicate frequently and quickly. The rate at which data can be shuttled between GPUs—the interconnect bandwidth—becomes a critical factor.

That’s why these centralized clusters rely on specialized high-speed interconnects, such as NVIDIA’s NVLink or InfiniBand networking, which minimize latency and maximize throughput. This allows for faster training with the addition of more GPUs, up to a certain point.

The “Latency wall” is a well-understood concept in which communication delays become so prominent that adding incremental GPUs is no longer advantageous.

In centralized setups, GPUs are placed inches apart, linked by terabit pipes, and synchronized down to the millisecond. Despite all this, actual training efficiency still lags. In 2024, Meta reported only 38–41% utilization of their GPU capacity (MFU) while training LLaMA 3, highlighting how much time even tightly coupled systems spend waiting on communication.

FLOPs, or floating point operations per second, is the standard metric for measuring how fast a system can perform the core mathematical computations that power AI training. Training compute has increased each year as new frontier models are released, and is expected to rise further as new models are released in 2025 - the yellow circle below.

.avif)

However, even in highly optimized clusters, GPUs often achieve only 35-40% of their theoretical peak FLOPS when training large models. This efficiency is measured using Model FLOPs Utilization (MFU), a metric that assesses how effectively a model leverages the computational capabilities of the hardware.

While GPU chip performance has skyrocketed, improvements in networking, memory, and storage have lagged. The bottleneck isn’t the silicon, it’s everything around it: memory, networking, and coordination.

Centralized data centers mitigate this issue with their high-speed internal networks, enabling data to be transferred between GPUs far more efficiently and thereby saving on idle time and costs. That’s why OpenAI, Google, and others continue to build bigger and better-knit clusters: when a training run costs $50M+, every ounce of efficiency matters.

Now, consider what happens if you try to distribute that training process across the open internet.

Instead of microsecond-latency fiber links between GPUs, you’re working with broadband connections stretched across continents. Instead of identical, synchronized hardware, you’ve got a patchwork of consumer and enterprise GPUs with wildly different capabilities. The delicate timing that centralized training depends on simply falls apart.

This is the core technical wall: training workflows assume a centralized, synchronous world.

And when you try to stretch those assumptions across a decentralized network, everything breaks:

- Gradient updates become unreliable

- Node dropout and inconsistent bandwidth kill performance

- Synchronization overhead dominates

- And worst of all, existing deep learning frameworks have no native support for these decentralized realities.

Distributed training over the internet inherits the worst of both worlds: the complexity of centralized training and the unpredictability of open networks. No surprise, then, that until recently, this approach seemed infeasible.

The deep learning stack—frameworks like PyTorch, GPU architectures, memory hierarchies, and networking protocols—has been optimized for clusters, not chaos.

And yet, despite all of this, the wall is starting to crack.

Innovators are reimagining training algorithms from the ground up. They’re designing optimizers that tolerate delay and frameworks that survive node churn.

Decentralized training is no longer a moonshot. It’s becoming real.

4. The Breakthroughs Making Decentralized Training Viable

Over the past two years, a flurry of research and engineering breakthroughs has begun to redefine decentralized training. Academics and startups tackled the fundamental question head-on: How can we effectively train models while minimizing communication over slow, unreliable networks?

4.1 DiLoCo - Prime Intellect

One solution has been to reduce the frequency of synchronization. Google DeepMind introduced a method called Distributed Low-Communication (DiLoCo) in November 2023, and the startup, Prime Intellect, has recently scaled it.

Prime showed that you can have “islands” of GPUs perform 500 local training steps on their own before sharing updates, instead of syncing every single step. This resulted in a reduction in bandwidth requirements of up to 500x while still achieving the correct training result, and was successfully demonstrated on a 10 billion-parameter training run.

In May 2025, Prime Intellect unveiled INTELLECT-2, a 32B parameter language model trained through globally distributed reinforcement learning. Unlike traditional centralized training methods, INTELLECT-2 leverages a decentralized, asynchronous framework that enables a heterogeneous network of permissionless compute contributors to participate in the training process.

"Heterogeneous" refers to the ability to use a mix of different GPU models and compute setups (including commercial-grade and consumer-level hardware) rather than requiring standardized, enterprise-class infrastructure.

Their framework introduces three key components:

- PRIME-RL: An asynchronous RL system enabling training across volatile, heterogeneous nodes.

- TOPLOC: A lightweight verification system for untrusted inference workers.

- SHARDCAST: A protocol for efficiently distributing and syncing model weights across the network.

This approach not only democratizes access to AI development but also showcases the viability of decentralized training at scale, marking a significant shift in how large language models can be developed and deployed.

Prime Intellect provides a web dashboard for volunteers to participate, and they’ve partnered with groups like Hugging Face, Akash, and others to source compute resources for these runs.

While not heavily blockchain-focused (yet), they refer to their system as an “open protocol” and emphasize practical demonstrations, proving to the world that distributed training can hit new scale milestones outside of Big Tech’s walls.

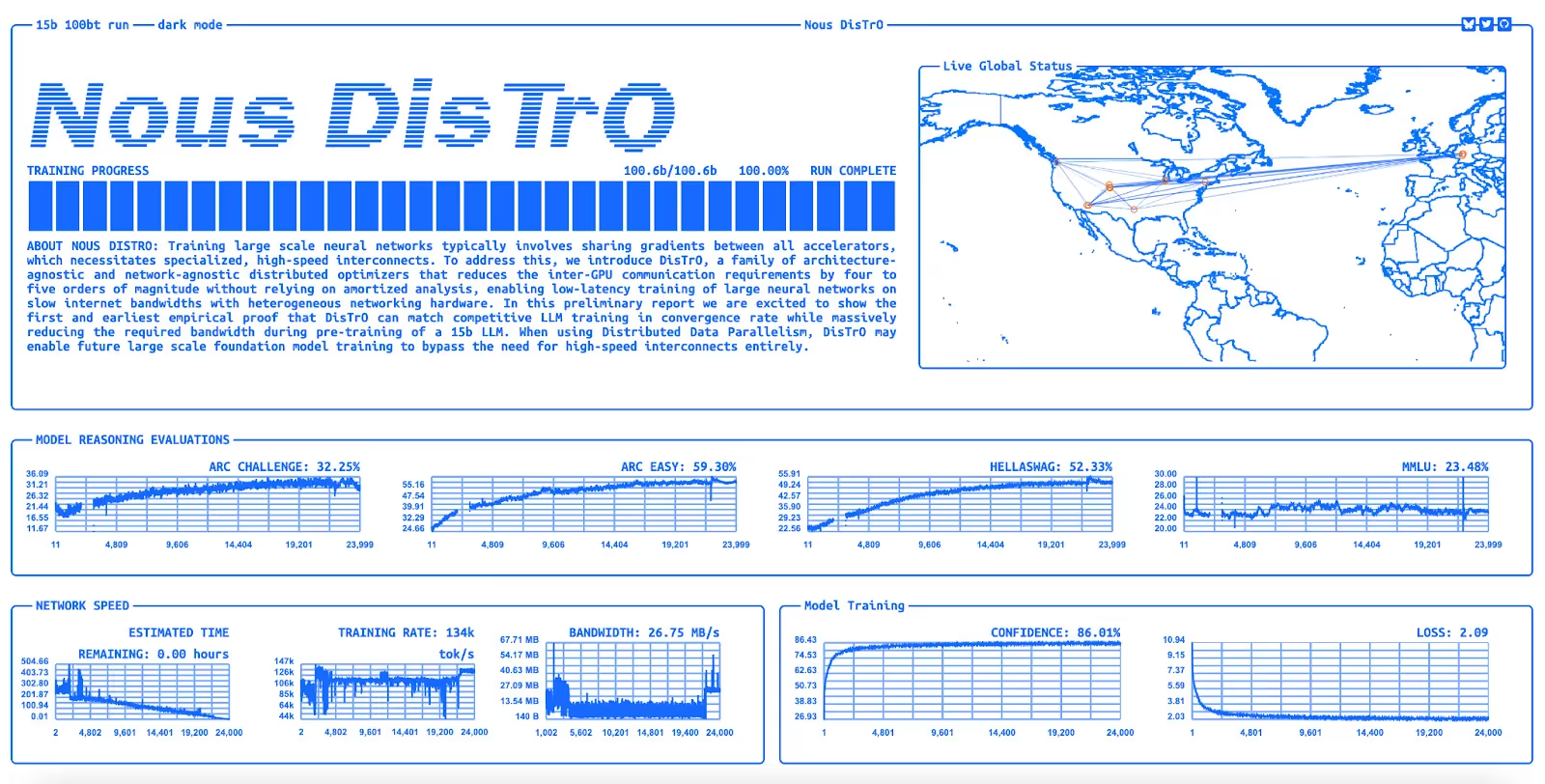

4.2 DisTrO - Nous Research

Similarly, Nous Research introduced an approach called DisTrO (Distributed Training Over the Internet), which allows each node to train independently and only periodically sync with others.

In 2024, DisTrO achieved 857x less bandwidth usage in experiments (training a 1.2B param model) with results comparable to conventional training. In effect, these techniques trade off more local computation for far less communication.

They prove that staleness (not syncing every microstep) can be managed in a way that doesn’t destroy the model’s convergence.

DisTrO is a family of novel optimizers that drastically reduce the communication overhead in data-parallel training across far-flung GPUs. In conventional data parallelism, each step requires an all-reduce sync of gradients, a deterrent over slow internet links.

DisTrO lets each node train mostly independently and only synchronize infrequently or in clever, lightweight ways. This slashes inter-GPU communication needs by three to four orders of magnitude, meaning only a small amount of bandwidth is sufficient to complete the task.

What does that enable? Essentially, thousands of volunteer GPUs worldwide can work together on one model.

Nous proved this out in late 2024, when they successfully ran a 15B parameter model across their decentralized network (dubbed Psyche) for 11,000 training steps. Remarkably, this globe-spanning swarm matched the results of a centralized run, despite only coordinating intermittently rather than at every step.

Now, in May 2025, Nous’ next run on Psyche will pretrain a 40B parameter model, which represents the largest pretraining run conducted over the internet to date.

4.3 Swarm Parallelism & Asynchronous Training - Pluralis Research

Swarm parallelism is an older (2023) decentralized training method designed explicitly for poorly connected, heterogeneous, and unreliable networks of devices.

Essentially, it is a fault-tolerant twist on model parallelism. Instead of the rigid, synchronous pipelines used in a data center, SWARM dynamically creates randomized pipelines between nodes and rebalances on the fly if some nodes lag or fail.

.avif)

SWARM has been recently iterated by Pluralis Research with the addition of asynchronous training.

In May 2025, Pluralis demonstrated that by allowing nodes to perform local weight updates asynchronously and only periodically synchronize, they could double the training speed of a SWARM pipeline.

The key is carefully mitigating the “stale gradient” problem, when different workers temporarily hold slightly different model versions. Pluralis introduced a novel tweak using Nesterov momentum to keep the asynchronous updates converging stably.

The result is an approach that allows heterogeneous GPUs to contribute to training with high utilization, even if some are slower. In their tests, the async method remains stable and converges where naive asynchronous training would diverge.

This forms the foundation of what Pluralis calls Protocol Learning: a model-parallel training system in which no single device ever holds the full model. Instead, fragments live across the network, enabling both privacy and collective ownership. The model “lives” inside the protocol, not on any one machine.

This paves the way to true collective ownership at the model layer by training foundation models that are split across geographically separated devices. It guarantees public access to strong base models and allows anyone to participate in the economic benefits of AI.

4.4 Verifiable Machine Learning - Gensyn

Another notable figurehead within decentralized training is Gensyn, a protocol for training and verifying machine learning models on a global network of idle or underutilized hardware.

By using cryptographic proofs to validate that work was done correctly, Gensyn makes it possible to trustfully outsource training jobs across an open market of compute providers, and pay the providers in GENSYN tokens. This approach unlocks massive, low-cost training capacity while preserving security.

A core innovation in Gensyn is its verification mechanism, which confirms that off-chain training was done accurately with minimal cost. Their protocol adds only about 46% overhead compared to standard training, a vast improvement over the 1,350% overhead incurred by natively re-running computations to check for accuracy.

Ultimately, Gensyn aims for a “train-to-earn” network: global GPU power utilized via blockchain, with training workloads verifiably farmed out and results aggregated on-chain.

Through innovations like DiLoCo, DisTrO, SWARM, asynchronous training, and verifiable ML, the once-daunting problems of latency, unreliable peers, and synchronization in a decentralized network are being addressed head-on.

Gradient exchanges that previously required data-center-grade networking can now be conducted over the public internet. And training that used to crash if one machine went offline can now reroute work on the fly.

With these breakthroughs, the decentralized training stack is proving itself, not just in theory, but in real-world runs of multi-billion parameter models.

The once-impossible notion of training a frontier-scale AI model in a decentralized way is rapidly becoming plausible, and one may even suggest that such decentralized networks could eventually out-compute the mightiest centralized cloud.

Pluralis Research, for example, argues that a truly globe-spanning training network might aggregate more GPU power than even the largest data centers, since physical facilities are limited by space, power, and cooling. In contrast, a distributed network can harness “all the computing power in the world” with no single point of constraint.

Each project—Prime, Nous, Pluralis, Gensyn—is building a critical piece of the decentralized AI stack. Together, they are laying the foundation for the Third Epoch of AI: an open, permissionless, and participatory future where anyone can help train the next generation of intelligence.

5. Coordination, Incentives, and the Blockchain Layer

Solving the technical piece is only half the equation. The other half is coordination—and that’s where crypto enters the picture.

In a world where thousands of independent participants contribute compute from around the globe, how do you ensure reliability, trust, and economic alignment?

How do you prevent freeloaders, verify honest contributions, and orchestrate model training without a central controller?

Here, the blockchain layer becomes essential. By pairing decentralized training protocols with tokens, smart contracts, and on-chain records, we begin to create an open marketplace for AI training.

Crypto has demonstrated that decentralized networks can achieve massive scale, and Bitcoin consumes ~150 TWh of electricity annually, which is double the energy used by even the largest AI clusters.

Bitcoin’s current energy consumption is ~100x the power required to run 100,000 of NVIDIA’s top H100 GPUs for a year.

The lesson: if behavior is correctly incentivized, resourcing will come. An AI training network with the correct incentive model could one day harness more compute than any single tech company, simply by activating the vast latent GPU power available worldwide.

In a crypto-native network, you assume participants might act in their own interest. Thus, the system must be robust against Byzantine behavior, and hence the need for economic incentives and verifiable compute.

The upside here is tremendous: permissionless access to AI development. Anyone with a gaming PC or a spare cloud instance can join a training run and be rewarded.

Cryptoeconomics coordinate this open party, keeping everyone honest and aligned, and blockchain provides the trust substrate for a globally distributed computer that trains AI models.

6. Conclusion

If the first epoch of AI was about access (using models via APIs), the second epoch was about ownership (downloading and fine-tuning open models). This third epoch is about participation: the democratization of the entire training stack.

This Third Epoch is fueled by:

- The collective compute of millions of idle GPUs,

- The collective intelligence of open-source researchers, and

- The collective coordination of cryptoeconomic systems that keep everyone aligned, honest, and incentivized.

The Third Epoch of AI won’t be powered by a single company or lab. It will be powered by networks. By protocols. By people.

This next chapter of AI won’t be written for us.

It will be written by us.