1. Introduction

Computer Use Agents (CUA) are the next evolution of AI beyond chatbots, capable of directly operating software on our behalf.

We’ve covered their basics in our previous post, “Computer Use Agents: Landscape, Training, Bottlenecks, and Pango”.

CUA’s combine vision models with language models to observe screens via screenshots, interpret on-screen text/UI elements via OCR, and execute step-by-step actions in a perceive-think-act loop.

Today, this once-niche field is heating up dramatically as a convergence of factors has accelerated progress:

- Major AI labs are all-in: OpenAI, Anthropic, and Google have each released agents that can control computers. OpenAI’s “Operator” books flights and fills forms; Claude’s “computer use mode” navigates a virtual desktop; and Google’s Mariner agent pilots Chrome. Together, they’ve kicked off a competitive race.

- Open-source breakthroughs: ByteDance’s UI-TARS 1.5, open-sourced in April 2025, previously lead on GUI benchmarks like OSWorld. Salesforce’s GTA1 outperforms OpenAI’s agent on key tasks, while InfantAgent-Next from the University of Minnesota beats Claude in many environments. Open-source tools are rapidly closing the gap.

- Agentic browsers & OS integration: Web and OS platforms are becoming agent-native. Opera’s new Neon browser features built-in AI for chat, autonomous actions, and content creation. Perplexity’s Comet does the same, automating tasks and interacting with web apps. Microsoft is embedding agents into Windows via Copilot, with tools like “Copilot Studio” for enterprise automation. Browsers and OSes are quickly becoming default platforms for CUAs.

- Infrastructure & data are emerging: Recognizing that current agents are limited by data, efforts like Chakra’s Pango network are tackling the training data bottleneck by crowdsourcing real user interactions at scale. Meanwhile, new agentic infrastructure, ranging from headless browser automation toolkits to vector databases for agent memory, is being developed as well. Models, data, eval environments, and integration frameworks are all coming together in 2025.

In the near term, CUAs will serve as copilots, assisting humans and saving countless labor hours. The race is on to build reliable digital “employees” that can handle complete workflow across apps.

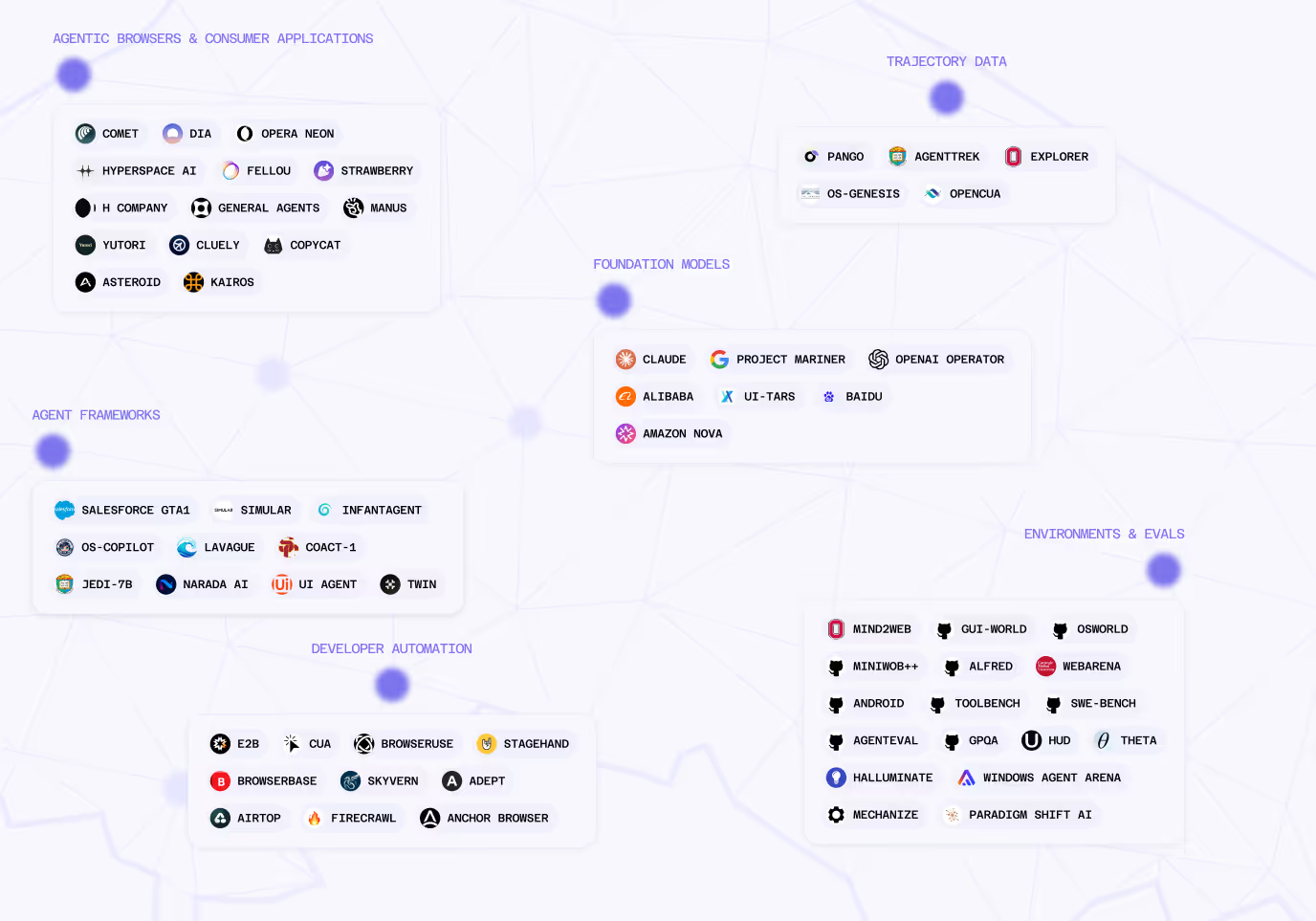

2. Market Map: Key Categories in the CUA Ecosystem

Today, the CUA landscape spans several overlapping categories.

2.1 Foundation Models with GUI Abilities

In our first CUA piece, we covered all the major foundation-model-based agents leading the space. That includes OpenAI’s Operator (now Agent), Anthropic’s Claude, and Google’s Mariner, each adapted to control GUIs through advanced multimodal models. We also explored ByteDance’s open-source UI-TARS 1.5, which quickly became a standout for both web and desktop automation, as well as efforts from Alibaba and Baidu, which are pushing forward Chinese-language CUAs.

Together, these agents set the foundational stage in our market map.

2.2 Agent Frameworks (Autonomous Agent Systems)

This category includes structured frameworks or architectures for building GUI agents, often modular systems that combine multiple models or planning modules.

GTA1 (GUI Test-time Scaling Agent):

Announced in mid-2025 by Salesforce AI (with academic partners), GTA1 is a high-performance agent framework that took first place on the OSWorld benchmark in the last two weeks.

GTA1’s novelty lies in its two-stage design: a separate planner model (e.g., an LLM like GPT-4 or Claude guiding high-level steps) and a grounding model (responsible for pinpointing the exact screen coordinates to click).

During task execution, GTA1 doesn’t commit to a single plan; instead, it samples multiple candidate action-sequences and evaluates them on the fly with another model acting as a “judge,” choosing the best option step-by-step. This clever test-time planning diversity makes it far more robust to errors (if one plan would have gotten stuck, an alternate path can be taken). It also uses reinforcement learning via GRPO to train the grounding model to click more accurately.

GTA1, paired with OpenAI’s “o3” model (the latest GPT-4 vision), achieved 45.2% success on OSWorld, a significant improvement from the ~12% that GPT-4 had achieved a year prior, and surpassing OpenAI’s own “Operator” agent. This is still well below the human 72%, but the progress is quick. GTA1’s approach of diverse planning and minimal reliance on brittle single-step reasoning is influencing other frameworks. It’s a research prototype for now (code is on GitHub), but we may see its ideas in production soon.

.avif)

InfantAgent & InfantAgent-Next:

InfantAgent-Next is a multimodal generalist agent that can interact with computers through text, images, audio, and video. It combines the strengths of “tool-based” agents (which use discrete tools/APIs for tasks) and “pure vision” agents (which rely only on screen pixels).

InfantAgent can deploy specialized sub-agents, such as a code-editor tool for coding tasks and a visual UI parser for click-intensive tasks, all coordinated through a central dialog memory. This hybrid design aims for both generality and efficiency. The agent uses direct GUI vision when needed, but also invokes high-level APIs when available (for example, utilizing a code compiler tool instead of attempting to write code via the GUI).

The framework is highly extensible: you can plug in your choice of LLM (they often use Claude-3.7 or GPT-4), your preferred vision model (UI-TARS for visual grounding), etc.

InfantAgent-Next outperformed **Anthropic’s Claude-based agent on OSWorld and can tackle not only GUI benchmarks like OSWorld but also more developer-oriented ones like SWE-Bench (software engineering tasks). The GitHub repo shows an active open-source project with a growing community.

For now, this is more of a research toolkit than a product, but it demonstrates the potential of modular agent architectures that mix and match multiple models and tools to handle a wide range of tasks.

.avif)

Simular’s S2 and Pro agents:

Simular is a startup (founded by ex-DeepMind researchers) building agent systems, notable for its focus on real-world enterprise workflows*.*

Their second-gen agent, Simular S2, is an agent framework that ranked #4 on OSWorld and #6 on the AndroidWorld benchmark as of July 2025. S2 employs a “mixture of experts” approach, utilizing different modules specialized for various contexts (web form filling, spreadsheet, terminal, etc.), and a meta-controller to route tasks. They reported ~34.5% success on very long 50-step OSWorld tasks, nearly on par with OpenAI’s scores.

Simular is coupling its tech with a business model: they offer Simular Pro, a $500/month per-seat “digital employee” for enterprises. It’s targeted at sectors like insurance and healthcare, where workers spend enormous amounts of time on form entries, data reconciliation, etc., often in legacy software with no APIs.

Simular Pro runs on a user’s Mac and can automate desktop tasks similarly to a human assistant. They emphasize features like data privacy (keeping sensitive information client side) and have built in domain-specific knowledge (for example, they train agents to handle specific insurance claims software UIs).

Their approach is notable for combining the success of research benchmarks with practical deployment.

.avif)

Agent frameworks are where we see architectural innovation: different ways to structure the problem of long-horizon planning and acting. They’re producing rapid gains on benchmarks and enabling specialized products (Simular), bridging between core models and end-user applications.

.avif)

2.3 Agentic Browsers and Consumer Applications

AI-powered browsers are web browsers with an agent “under the hood” to help the user. Rather than a separate AI app, the browser itself becomes the agent platform.

Perplexity’s Comet Browser:

Launched July 2025, Comet is a Chromium-based browser by Perplexity AI. It looks and feels like Chrome, but with AI woven throughout.

Comet’s key feature is a native agentic assistant that can summarize the page you’re on, answer questions with cited sources, and even perform actions in the page context. You can highlight text and ask Comet to draft an email reply, or let Comet click through a multi-step web form for you. Under the hood, it uses Perplexity’s LLM and their own retrieval system.

Perplexity’s Comet is an agentic browser built with AI at its core. It’s context-aware, able to act across tabs continuously, and integrates Perplexity’s search engine directly into the address bar to deliver sourced answers instead of links.

Early reception has been strong, and while still new, Comet signals how mainstream software may evolve to make agents a primary interface rather than an add-on.

.avif)

Browser Company’s “Dia”:

The Browser Company (makers of the Arc browser) introduced Dia around the same time as Comet.

Dia is another custom browser with an AI agent that can carry out tasks for you, like booking a restaurant reservation online end-to-end from a single natural language request.

The difference in Dia is a focus on UI design and minimalism. It tries to present the agent’s actions in an intuitive way, and allow easy corrections or interventions by the user. It’s also closely tied with Arc’s philosophy of browser workflows.

Dia is pursuing a seamless model of human–agent interaction within a familiar browser environment, positioning the browser as an active assistant rather than a passive window.

More broadly, Dia highlights that the evolution of agentic web interfaces will depend on designing effective, intuitive interactions between humans and agents.

.avif)

Opera Neon:

Opera’s entrant, Neon, is currently in closed alpha.

Neon builds on Opera’s earlier AI features (Opera was one of the first to integrate ChatGPT into a sidebar), but Neon goes further: it has modes like Chat, Do, and Make built into the UI.

“Chat” is the conversational Q&A mode (for searching, summarizing pages, etc.).

“Do” is the action agent. Neon can utilize a textual representation of websites to understand content and interact on your behalf, automating web tasks such as filling out forms or shopping. (Notably, Opera claims these actions are done locally in your browser for privacy.)

“Make” is a creative mode where Neon’s AI will build something for you. It can generate a mini website or code snippet, even if it has to spin up a cloud sandbox to do so (Neon can offload to a cloud instance that continues to run when your PC is offline).

Opera is essentially combining web automation, your intent, and content generation into one product. Opera even coined the term “Web 4.0 – the agentic web” for this emerging ecosystem where websites may eventually cater to both human and AI agent visitors. Neon emphasizes user control: e.g., it shows you each step the agent is taking and asks for confirmation on final submissions, reflecting how to balance autonomy with transparency.

.avif)

Consumer Interfaces (Manus & Ace):

Notably in the consumer landscape, Manus AI is a general AI agent that turns your thoughts into actions. It has the ability to plan and execute tasks end-to-end on behalf of the user. It recently rolled out a “Cloud Browser” that can sync your login state and carry out web tasks on your behalf after a one-time login. This lets the AI handle browsing chores with minimal intervention.

Similarly, General Agents’ platform introduced Ace, a computer autopilot that performs tasks on your desktop using the normal mouse-and-keyboard interface. The philosophy behind Ace is to seamlessly embed AI into existing user workflows. General Agents aims to bring artificial intelligence to where people already are instead of forcing anyone to learn new apps or interfaces.

Taken together, Manus and Ace demonstrate how AI agents are moving from research demos into real consumer-facing tools. By iterating on familiar software (browsers, desktops, etc.), these agents lower the barrier to mainstream adoption and quietly weave intelligent assistance into the fabric of everyday computing.

.avif)

.avif)

Agentic browsers and consumer applications aim to turn the web browser from a static tool into an intelligent assistant. They blur the line between “browser” and “agent platform.” This could accelerate adoption of CUAs because instead of finding and installing an AI agent, users might just get one built into software they already use (browser, email client, etc.). It’s reminiscent of how features like spellcheck or autofill became ubiquitous once baked into browsers. Soon, we may have “autoform-filling AI” and “AI reading mode” as standard.

Agentic browsers also illustrate the UX evolution: they need to earn trust, explain their actions, and execute gracefully. The consumer-facing layer of CUAs is emerging now - not just research demos, but tools we can try in daily life.

2.4 Dev Automation / Open-Source Toolkits

Alongside models and products, there’s a rich ecosystem of open-source toolkits that make it easier to build and experiment with GUI agents. These include browser automation wrappers, training environments, and developer SDKs. A few worth noting:

Browser-Use:

“Browser-Use” is an open-source library (14k+ stars on GitHub) that acts as a bridge between LLMs and a real web browser. It provides a high-level API where an AI agent can issue commands without dealing with raw pixels.

Underneath, Browser-Use uses Playwright (a headless browser) and some clever DOM serialization to translate those natural language-like instructions into browser actions. It gained fame because it enabled projects like Auto-GPT to control a browser. Think of it as making the web LLM-friendly: it converts web pages into a structured format (or uses Accessibility DOMs) so that a language model can interpret them more easily, and it provides safe execution of clicks and typing.

Many community agents (like “AgentGPT”) are built on Browser-Use. There’s also a whole ecosystem around it including a Web UI for Browser-Use that lets you run it with a Gradio interface in your own browser, and guides for integrating Browser-Use with various LLMs. If you want to prototype a web-browsing agent today using an API key for GPT-4, Browser-Use is one of your go-to tools. It abstracts a lot of the nasty low-level browser automation details.

.avif)

Stagehand:

Stagehand is another open-source framework (by BrowserBase) aimed at more robust browser automation with AI. It combines the strengths of traditional automation (Playwright code) with LLM flexibility.

Stagehand lets developers choose what to do with code vs. what to delegate to AI. For example, you might write deterministic Playwright scripts for known steps (like logging into a site with a username/password), but use Stagehand’s agent.execute("do XYZ…") for the fuzzy parts (like navigating an unfamiliar interface). It provides a few key abstractions: act() to let an AI take a single action, extract() to have the AI read data from the page into a structured schema, and an agent() which runs a full perceive/think/act loop in the browser.

Under the hood, it can plug into OpenAI’s “ChatGPT with browsing” models or Anthropic’s models, and it uses the BrowserBase cloud service to execute the actions reliably.

The team emphasizes reliability features like previewing the AI’s proposed actions before executing (so you can veto something dangerous) and caching of routine steps. Essentially, it’s trying to solve the “agents are unpredictable” issue by giving developers more control and hybridizing with classic automation.

Engineers can build AI-driven end-to-end web task flows without giving up determinism where it matters. Stagehand is relatively new but is gaining traction, particularly among startups that want to add an “auto-pilot” to their web apps.

.avif)

Others:

There are many other toolkits; a few examples: AutoGPT.js brings Auto-GPT-like loops to the browser entirely in JS; Playwright Guided is Microsoft’s attempt to incorporate an LLM to generate Playwright scripts from descriptions; Open-Operator is a template that uses Stagehand under the hood to recreate something like OpenAI’s Operator in your own project.

There’s also a push toward in-browser inference: projects like WebLLM let you run a smaller LLM purely in the browser via WebGPU (imagine a private local agent extension with no server).

Mozilla’s AI arm has built Wasm Agents, which are self-contained agents that can run securely in a browser tab as WebAssembly, interacting with the DOM. All these suggest that the barrier to entry for building a CUA has dropped significantly.

If you have an API key for an LLM and basic coding skills, you can spin up a prototype of an agent that logs into Twitter and posts a tweet, or scrapes some data and organizes it, within hours using these libraries. This open-source toolkit ecosystem is crucial, experimentation is not limited to Google and OpenAI. Anyone can try new ideas, which leads to a flood of creativity.

2.5 Data Infrastructure (for Training & Improving CUAs)

One of the biggest challenges for training high-performing GUI agents is the lack of large-scale, diverse interaction data (i.e. records of real humans doing tasks on computers).

Unlike fields like vision or NLP, we don’t have ImageNet-scale or WebText-scale datasets of people using software. To address this, new efforts have sprung up:

Pango (by Chakra):

Pango aims to break the data bottleneck holding back CUAs. Today’s top agents plateau at ~40% success, far below the ~70% humans achieve, largely because they’re trained on the same limited datasets.

Pango’s solution is to crowdsource real, diverse workflows: users install a Chrome extension, complete targeted “daily quests” like organizing files or building a spreadsheet, and get paid for their time. The extension logs screenshots, UI element data, and action sequences, focusing on areas such as multi-step workflows, error recovery, and cross-app tasks.

In practice today, many researchers have given up on building this SFT dataset as a consequence of how challenging it is to procure this type of data ethically and at scale. Instead, much of the research is focused on synthetic trajectories. Works like OS Genesis, Agent Trek, and Explorer all seek to build these trajectories datasets through introspection, and our belief is these works are long-term synergistic. Better human data drives better synthetic data generation which long term drives improved model outcomes.

3. Evaluation, Environments, and Benchmarks

Evaluating how “accurate” a CUA is can be tricky. These agents must handle open-ended tasks with many steps, where partial success or graceful failure is challenging to quantify. The community has developed an array of benchmarks and simulated environments to test agents in a standardized way.

Simulated GUI Environments

Since letting an AI agent roam freely on a real computer or the open web is both uncontrolled and potentially unsafe, researchers built simulators (contained environments with predefined tasks) to evaluate agents.

In our first CUA piece, we explored three of the largest datasets, benchmarks, and simulators in depth: Mind2Web, GUI-World, and OSWorld. We’re seeing an explosion in new evaluation frameworks as researchers continue to saturate the performance of existing benchmarks.

Challenges in Evaluation:

Despite progress, CUA evaluation still has major gaps. There’s no standardized “Agent IQ,” and teams report inconsistent metrics (success rate, reward score, human preference) making comparisons messy. Many benchmarks are also strict: if an agent completes 9 of 10 subtasks, it’s still marked a failure unless partial credit is built in. Researchers are now exploring more granular metrics like success at N steps or time-to-completion. Another challenge is generalization: agents often overfit to specific apps or sites, struggling when layouts change. This has shifted evaluations toward zero-shot setups, like Mind2Web, which tests agents on unseen websites. Finally, technical success isn’t the whole story. Agents can bumble through tasks inefficiently, eroding user trust. Measuring efficiency, fluidity, and usability remains an open frontier for evaluation.

We have a growing toolbox of benchmarks: from MiniWob++ for basic skills, to WebArena for web tasks, to OSWorld for full computer tasks, plus targeted sets like ToolBench for tool-use and SWE-Bench for coding, and even reasoning tests like GPQA.

These benchmarks have driven rapid progress by giving researchers clear goals (e.g. “get SOTA on OSWorld”). But they also highlight how far we have to go. The best agent still fails ~55-60% of OSWorld tasks and struggles with out of domain testing.

.avif)

4. Conclusion

Computer-use agents (CUAs) are rapidly maturing from flashy demos into practical digital coworkers, powered by advances in multimodal models, richer training pipelines, and growing demand to automate knowledge work.

In the past year, new datasets and stronger vision–language systems have pushed performance forward, and in some domains agents are nearing human efficiency. Still, challenges remain: today’s best agents complete only ~38% of tasks on comprehensive benchmarks versus ~72% for humans, with weaknesses in long-horizon reasoning, generalization, and error recovery. Most are also constrained by strict safety guardrails.

Encouragingly, these aren’t dead ends. Larger models, smarter memory systems, and more diverse training data are already driving rapid progress. Looking ahead, natural language could become the universal interface for software, with CUAs executing the clicks and keystrokes behind the scenes.

In the soon-to-be-released Part 3 of this series, we’ll dive deeper into the RL environments that are shaping how CUAs are trained and evaluated.

The future will depend on open data and collaboration, and Chakra’s Pango platform is here to crowdsource real workflows and fuel better training.

Together, we hope to move from software we use to agents we trust.